Use cases for devnodeclean.exe

So what is devnodeclean.exe, and what are its use cases? Windows creates an entry in the registry for every new device that is connected. That also goes for storage devices, including VSS shadow copies.

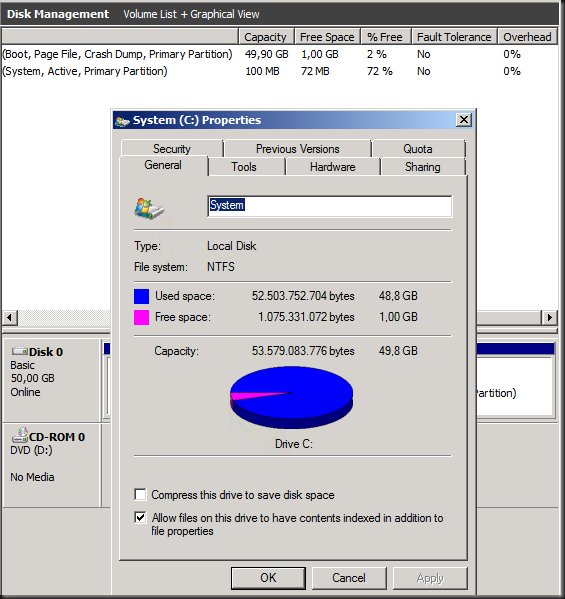

When you create a lot of VSS snapshots that get mounted and unmounted, whether software (Windows) or hardware (SAN) based, this creates a lot of these registry entries. Normally these should get cleaned up by the process creating them. Microsoft can take care of its own use cases, but it cannot do this for 3rd party software, as Windows cannot know the intent of that software. Hence you might end up with a SYSTEM registry hive that starts to bloat. When that hive gets big enough you will notice slowdowns during shutdowns and restarts. These slowdowns can become very long and can even lead to Windows failing to boot.

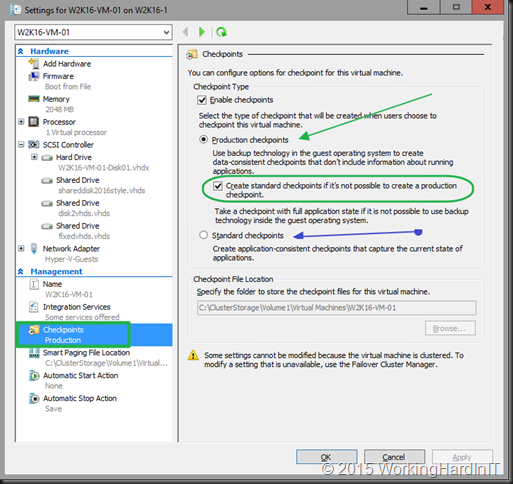

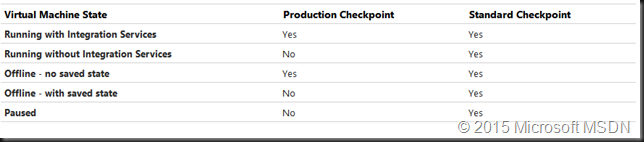

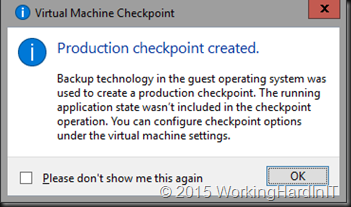

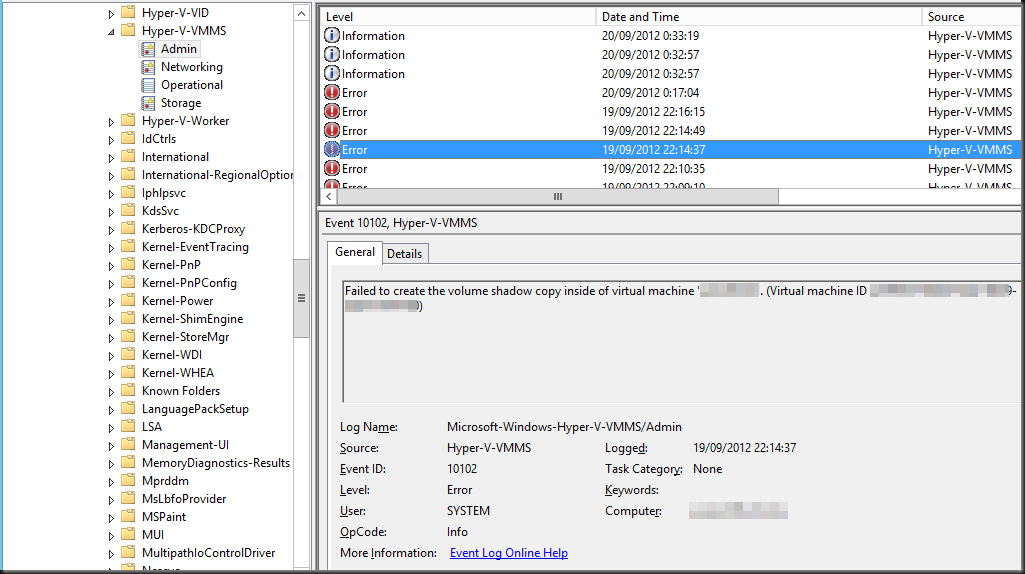
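If you want a quick indication of whether a host is affected, one option is to look at the size of the SYSTEM hive file on disk. This is a minimal sketch, assuming the hive lives in the default location under `%SystemRoot%\System32\config` and that the session is elevated. No threshold for "too big" is implied here, so compare the number against similar, healthy hosts.

```powershell
# Assumes the default hive location under %SystemRoot%
$HivePath = Join-Path -Path $env:SystemRoot -ChildPath 'System32\config\SYSTEM'

# -Force is used because the file may carry hidden or system attributes
$Hive = Get-Item -Path $HivePath -Force

# Report the on-disk size in megabytes
'{0}: {1:N1} MB' -f $Hive.FullName, ($Hive.Length / 1MB)
```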

This can happen with backup software that ships with a SAN hardware VSS provider or with a backup solution that integrates with SAN hardware VSS providers. Mind you, it is not limited to hardware VSS providers. It can also happen with software VSS providers. Microsoft actually had this as a bug with Hyper-V backups a long time ago. A hotfix fixed the issue by removing the registry entries the backups created.

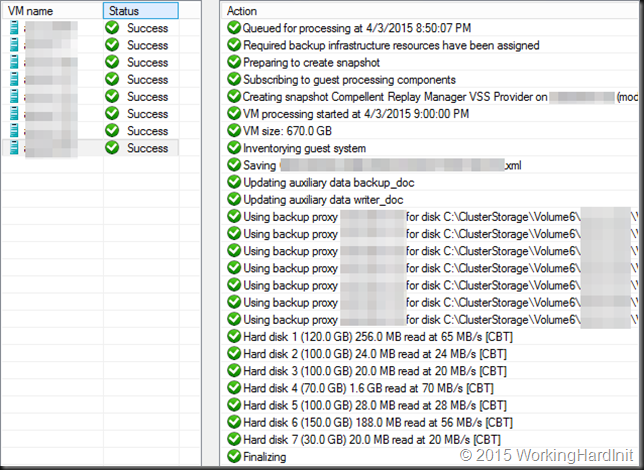

But not all software will do this. Not even today. The better software does, but even Veeam only provided this option in VBR 9.5 Update 4. Mind you, Veeam is only responsible for what they control via storage integrations. When you leverage an off-host proxy with Hyper-V, Veeam collaborates with the hardware VSS provider but does not orchestrate the transportable snapshots itself. So in that case the cleanup is the responsibility of the SAN vendor’s software.

Another use case I have is file servers on a SAN being backed up and protected via hardware VSS snapshots with the SAN vendor’s software. That also leads to registry bloat.

I never had any issues as I clean up the phantom registry entries preemptively. Veeam also published a KB article on this before they fixed it in their code.

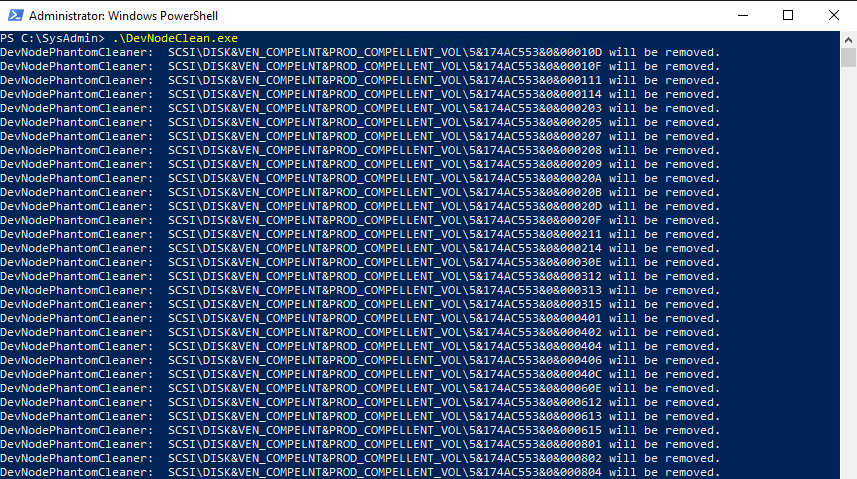

Still, if you need to clean up existing phantom registry entries you will need to use a tool called devnodeclean.exe.

Preventing registry bloat

When the software responsible doesn’t prevent registry bloat you will have to clean up the phantom registry entries in another way. Doing this manually is tedious and not practical. So, let’s forget about that option.

You can write your own code or script to take care of this issue. Cool if you can, but realize you need to be very careful about what you delete in the registry. Unless you really know your way around the depths of storage-related entries in the registry I suggest using a different approach, which I’ll discuss next.
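Even if you never delete anything yourself, a read-only look at how many non-present devices Windows still remembers can help you judge the scale of the problem. The sketch below is only an illustration and rests on a few assumptions: the `Get-PnpDevice` cmdlet is available (to my knowledge that means Windows 10 / Windows Server 2016 or later), and the device classes `VolumeSnapshot`, `Volume`, and `DiskDrive` are the ones of interest on your host. It changes nothing.

```powershell
# Read-only: counts PnP devices Windows remembers but that are no longer present.
# The class names are assumptions for storage-related entries; adjust as needed.
$Classes = 'VolumeSnapshot', 'Volume', 'DiskDrive'

Get-PnpDevice -Class $Classes -ErrorAction SilentlyContinue |
    Where-Object { -not $_.Present } |      # keep only the phantom (non-present) devices
    Group-Object -Property Class |          # one group per device class
    Select-Object -Property Name, Count     # class name and number of phantom devices
```

A count in the thousands for any of these classes suggests that snapshot devices are not being cleaned up by the software that created them.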

Another solution is to use the Microsoft-provided tool devnodeclean.exe. This tool is Microsoft’s version of the example code you can find in the article “How to remove registry information for devices that will never be used again”.

You can download that tool here. Extract it and grab the .exe that fits your OS architecture, x86 or x64. I usually put it in the subfolder Bin under C:\SysAdmin\Tools\DevNodeClean\ where I also create a subfolder named Logs. Remember you need to run this with elevated permissions. Running devnodeclean.exe /n lists the entries it will remove, while running it without a switch actually removes the entries. It will work with Windows Server 2012 (R2), 2016, and 2019. It can take a while if you have many thousands of entries.
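Before the first real cleanup on a host, a dry run with `/n` is a sensible way to see what would go. The sketch below assumes the folder layout described above. The preview file name is hypothetical, and nothing is assumed about the tool's output format beyond it being text.

```powershell
# Paths follow the folder layout described above; adjust to your own
$Exe = 'C:\SysAdmin\Tools\DevNodeClean\Bin\DevNodeClean.exe'
$Preview = 'C:\SysAdmin\Tools\DevNodeClean\Logs\DevNodeCleanPreview.txt'  # hypothetical file name

# /n only lists what would be removed; nothing is deleted
& $Exe /n | Tee-Object -FilePath $Preview

# Rough size of the list, counted as lines of output
@(Get-Content -Path $Preview).Count
```

Run this from an elevated PowerShell session. Once the list looks sane, running the same executable without `/n` performs the actual removal.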

While you can run the tool manually in “one-off” situations, normally you’ll want to run it automatically and regularly. For that, I use a PowerShell script that logs the actions and I use Task Scheduler to run it every day or every week, depending on the workload on that host.
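Registering the task can itself be scripted with the ScheduledTasks cmdlets. This is a sketch under stated assumptions, not a prescribed setup: the script path, task name, and schedule are all hypothetical, and running as SYSTEM is just one way to satisfy the elevation requirement.

```powershell
# Hypothetical script path and task name; adjust to your environment
$ScriptPath = 'C:\SysAdmin\Tools\DevNodeClean\Bin\Invoke-DevNodeClean.ps1'

# Start PowerShell with the cleanup script as its argument
$Action = New-ScheduledTaskAction -Execute 'powershell.exe' -Argument "-NoProfile -File `"$ScriptPath`""

# Weekly run; swap in -Daily (without -DaysOfWeek) for busier hosts
$Trigger = New-ScheduledTaskTrigger -Weekly -DaysOfWeek Sunday -At 3am

# Run as SYSTEM with highest privileges, since the tool needs elevation
$Principal = New-ScheduledTaskPrincipal -UserId 'NT AUTHORITY\SYSTEM' -LogonType ServiceAccount -RunLevel Highest

Register-ScheduledTask -TaskName 'DevNodeClean' -Action $Action -Trigger $Trigger -Principal $Principal
```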

Sample Code

Below is some sample code to get you started.

```powershell
# Timestamp used in the transcript file name (format yyyyMMddTHHmmss)
$TimeStamp = (Get-Date).ToString('yyyyMMddTHHmmss')
$PathToDevNodeClean = 'C:\SysAdmin\Tools\DevNodeClean'
$LogFile = "$PathToDevNodeClean\Logs\DevNodeCleanLog-$TimeStamp.txt"

# Log everything this script writes to a per-run transcript file
Start-Transcript -Path $LogFile

Write-Output "$(Get-Date): Starting registry cleanup of phantom VSS entries"
# Running the tool without a switch actually removes the entries (needs elevation)
& "$PathToDevNodeClean\Bin\DevNodeClean.exe"

Write-Output "$(Get-Date): Cleaning up old log files"
# 0 removes every log from earlier runs; raise this to keep some history
$DaysToRetain = 0
$DateTime = (Get-Date).AddDays(-$DaysToRetain)

# Select expired logs, skipping the transcript of the current run
$AllLogFilesInDevNodeClean = Get-ChildItem -Path "$PathToDevNodeClean\Logs" -Filter 'DevNodeCleanLog-*.txt' -Force -File |
    Where-Object { $_.CreationTime -lt $DateTime -and $_.FullName -ne $LogFile }

foreach ($File in $AllLogFilesInDevNodeClean) {
    $FileName = $File.FullName
    $Now = Get-Date
    try {
        # -ErrorAction Stop turns a failed delete into an exception the catch block can handle
        Remove-Item -Path $FileName -ErrorAction Stop
        Write-Output "$Now > Deleted file $FileName because it was created before $DateTime"
    }
    catch {
        Write-Output "$Now > Failed to delete $FileName. It is probably in use."
        Write-Output "$Now > $($_.Exception.Message)"
    }
}

Stop-Transcript
```

Good luck with your devnodeclean.exe adventures. As with any sample code, big boy rules apply: use it at your own risk and test before letting this loose on your production systems.

This is just one example of how my long-time experience with Windows storage and backups prevents problems in the environments I manage or design. If you need help or have a question, reach out and we’ll try to help.
