Introduction

You’ll find the following recommendations on line about optimizing Live Migrations:

- Use bigger pipes (10Gbps is better than 1Gbps)

- Enable Jumbo Frames

- Up the Receive Buffer to 8192 (Exchange 2010 virtualization recommendation for Live Migration)

As we’ve been building Hyper-V Cluster since the early betas let me share some experiences with this. For the curious I used Intel® Ethernet X520 SFP+ Direct Attach Server Adapters & DELL PowerConnect 8024F 10Gbps switches for my testing. See my blog posts on considerations about the use of 10Gbps in Hyper-V clusters here:

- Introducing 10Gbps Networking In Your Hyper-V Failover Cluster Environment (Part 1/4)

- Introducing 10Gbps With A Dedicated CSV & Live Migration Network (Part 2/4)

- Introducing 10Gbps & Thoughts On Network High Availability For Hyper-V (Part 3/4)

- Introducing 10Gbps & Integrating It Into Your Network Infrastructure (Part 4/4)

Bigger pipes are better

On bigger pipes I can only say that if you can afford them and need them you should get them. End of discussion.

Jumbo frames rock

Jumbo frames help out a lot (+/- 20 %), especially with the larger memory virtual machines.

The golden nugget

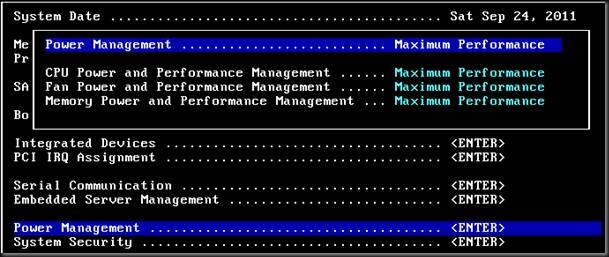

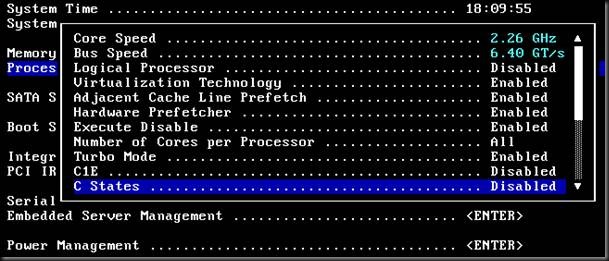

So far so good, but there is one golden nugget of information I want to share. There is little trip wire that can prevent you from getting your optimal performance. Advanced power settings in the BIOS. If you read my blogs you might have come across a blog post Consider CPU Power Optimization Versus Performance When Virtualizing and I encourage you to go and read that post as it holds a lot of good info but also is very relevant to this post. Because we have yet another reason to make sure your BIOS is set right to achieve a decent return on investment in quality hardware.

In our experience those power saving settings, the C states and the C1 states are also not very helpful when it comes to Live Migration & such. I got from a meager 20% bandwidth use all the way up to 35-45% at best with jumbo frames enabled and the power settings set to ”Full Power”. A lot better but still not very impressive.

Now go ahead and disable the C states AND the C1E state to achieve 55% to 65%.

Now the speed of a live migration varies greatly between virtual machines that are idle or running a full load, both CPU & memory wise. It also depends on the load the host you’re migrating from and to, but this impact is less when you disable those advance CPU power settings.

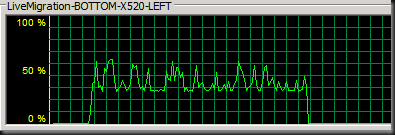

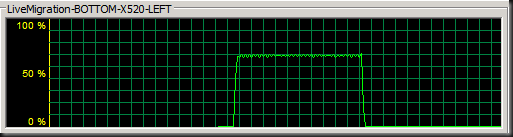

Look at the following screen shots

A SQL Server with 50GB of RAM being live migrated over 10Gbps. Jumbo frames enabled, Power Settings optimized but with C1E & C States enabled.

A SQL Server with 50GB of RAM being live migrated over 10Gbps. Jumbo frames enabled, Power Settings optimized but with C1E & C States disabled.

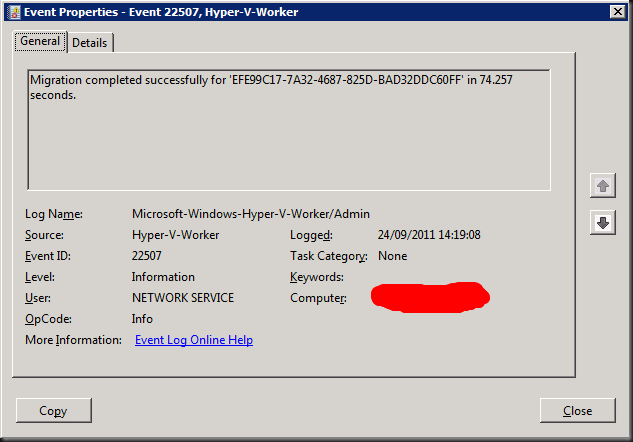

The live migration of this virtual SQL Server takes between 74-78 seconds. Not bad!

By the way these settings also help with 1Gbps but there is isn’t as spectacular. You use 99% instead of 75-80% of you bandwidth. And improvement yes, but not on the same scale as with 10Gbps for speeding up Live Migrations.

As you can see in this post on the TechNet support groups, this seems to be a common occurrence. It’s not just me who’s seeing things: Live Migration on 10GbE only 16%. even Dell chimed in there confirming these findings in their labs.

Receive Buffer

There is one setting that’s been advised for Exchange 2010 virtualization with Hyper-V that I have not seen improve speeds and that’s upping the Receive Buffer 8192. You can read this in Best Practices for Virtualizing Exchange Server 2010 with Windows Server® 2008 R2 Hyper V™. In some cases I tested this even reduces the results, especially when you have C1E & C states enabled. It is also a confusing recommendation as they state to set the Receive Buffer to 8192 .This value however is dependent on the NIC type and driver so you might only be able to set it to 4096 or so. The guidance should state to set it as high a possible but I have not seen any benefits. Do mind that I did not test this with a Hyper-V cluster running a virtualized Exchange 2010 guest. Your mileage may vary. Trust but verify is the age old adagio. Also keep in mind I’m running 10Gbps, so the effect of this setting might be not be what it could do for a 1Gbps network, but on the whole I’m not convinced. If you implement all other recommendations you’ll saturate a 1Gbps already.

What does this mean?

The sad news is that in virtual environments or other high performance configurations the penguins have to give way to performance. I wish it was different but unfortunately it isn’t.

By the way, this is vendor agnostic. You’ll see this with HP, DELL, CISCO in all form factors whether they are tower, rack or blade servers. The main thing you need to make sure is that the BIOS allows you to disable the C States en power settings. Not all vendors/BIOS version allow for this I read so make sure you check this. Some CISCO blades have annoying on this front, ruining the performance of VDI projects with less than optimal CPU performance but they have released an updated BIOS now to fix this.

Look, it makes no sense saving on power if it means you’ll by more servers to compensate for the lack of performance per unit. In my honest opinion a lot of all the hardware optimizations are awesome but they still have a long way to go in making sure it doesn’t incur such a hit even on performance. Right sizing servers in number & type of CPU, power supplies etc. still seems the best way to avoid waiting energy and money. Buying more power than needed and counting on the power consumption optimizations to reduce operating cost can be a good idea to protecting your investment for expected future increases in resource demand within the service life of your hardware. On average that is 3 to 5 years depending on the environment & needs.

Conclusion

Three things are needed for lightning fast Live Migrations:

- Bandwidth. Hence the 10Gbps network. There is no substitute for bigger pipes.

- Jumbo Frames. Configure them right & you’ll reap the benefits

- Disable C1E& C states. Also Configure your servers power options for maximum performance.

- I have not been able to confirm the receive buffer has a big impact on Live Migration speed or does any good at all. Test this to find out if it works for you

Remember that you’ll be able to do multiple Live Migrations in parallel with Windows 8. So a 10Gbps pipe will be used at full capacity then. Being able to use more networks for Live Migration will only increase the capability to evacuate a host fast or to move virtual machines for load balancing across a cluster. If you look at the RDMA, infiniband, 40/100Gbps evolutions becoming available in the next 12 to 36 months 10Gbps will become a lot more mainstream while at the same time the options for network connectivity will become more diversified. 10Gbps prices are dropping but for the moment they do remain high enough to keep people away.