Prologue

On a gloomy day, dark, grey and cold, we gave battle to RoCE & DCB (PFC/ETS). The fight was a long one, the battlefield uncharted, and we had only our veteran attitude towards adversity to guide us through the switch configurations. It seemed that no man had gone that far to the edges of the Windows Server 2012 empire. And when it came to RoCE & DCB meeting Didier, I needed to show it that it had been conquered, and I was reminded of a quote from Gladiator:

Quintus: ~~People~~ RoCE/DCB configs should know when they are conquered.

Maximus: Would you, Quintus? Would I?

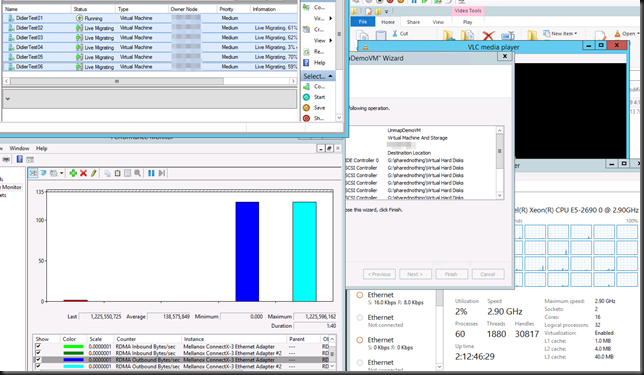

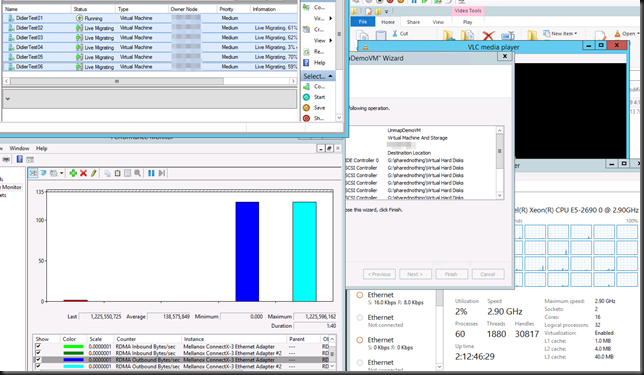

After many, many lonely & unsuccessful hours dealing with Performance Monitor, switch configurations, reloads, firmware, drivers & Windows, we got results:

… “it’s working” … “holy s* look at those numbers” …

On that dark day in a scarcely illuminated room, in the faint glare of the monitors, even the CLI of the switches in PuTTY felt like a grim, cold place. But all that changed as the impressive results brightened up the day and made all the effort seem worthwhile. “Didier Victor,” I thought as I looked away from the screen, “once more.”

But it has been a hard-won victory. And should you fight this battle? Well, let’s discuss this a bit now that we’ve got your attention. RDMA is a learning process for many of us, and none of InfiniBand, iWARP or RoCE is the one that needs to win at this game. It’s you, via the knowledge you’ll gain working with RDMA technologies.

SMB Direct or SMB over RDMA comes in three flavors

InfiniBand (Mellanox)

That’s been here for a while. It has a high cost associated with it (depending on where you come from) and also a psychological barrier. Try discussing buying 10Gbps versus InfiniBand with semi-technical managerial types. You’ll know what I mean.

Deploying Windows Server 2012 with SMB Direct (SMB over RDMA) and the Mellanox ConnectX-2/ConnectX-3 using InfiniBand – Step by Step

iWARP (Chelsio / Intel)

RDMA over TCP/IP, with the TCP/IP processing offloaded to the card. It can leverage DCB but doesn’t require it.

Deploying Windows Server 2012 with SMB Direct (SMB over RDMA) and the Chelsio T4 cards using iWARP – Step by Step

RoCE (Mellanox)

“InfiniBand over Ethernet”, so you “NEED” (no, not a real hard requirement) DCB with PFC/ETS (DCBx can be handy) for it to work best. There’s no need for Congestion Notification, as that’s for TCP/IP, but it could be nice with iWARP (see above). Do note that you’ll need to configure your switches for DCB & that’s highly dependent on the vendor & even the type of switch.

Deploying Windows Server 2012 with SMB Direct (SMB over RDMA) and the Mellanox ConnectX-3 using 10GbE/40GbE RoCE – Step by Step

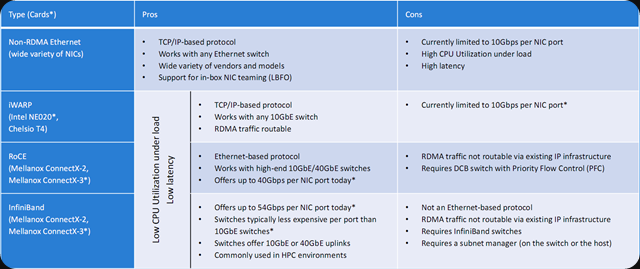

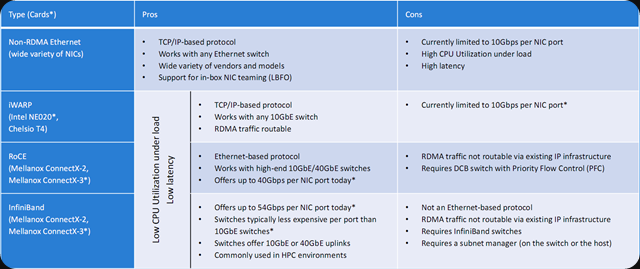

Here’s an older overview of the RDMA flavors’ pros & cons:

Please see Jose Barreto’s excellent work on explaining SMB 3.0 over RDMA in his presentations at SNIA, TechEd and on his blog.

While I know of two people in my network working with InfiniBand for SMB Direct and Windows Server 2012 (R2), most of us are doing 10Gbps. Pricing for InfiniBand has a bad reputation. Not because InfiniBand is super costly compared to 10/40Gbps (I’m told that most people who ask for quotes are positively surprised), but when you can’t afford a Porsche you’re not shopping for a Ferrari either. Especially not when a mid-size sedan will serve all of your needs above and beyond the call of duty. On top of that, you might have bought all that nice “converged network ready” 10Gbps gear some years ago. Some of us may be working towards 40Gbps, but most are 10Gbps shops. My 40Gbps is “limited” to the interlinks & uplinks. Meaning that we go for either iWARP or RoCE.

RoCE or iWARP

Which of those two is best? Well, the line is drawn between vendors. RoCE today equals Mellanox (yes, the InfiniBand vendor; RoCE is sometimes called “InfiniBand on layer 4 over Ethernet layer 2”) and iWARP means Chelsio or Intel (their cards look a bit long in the tooth, however).

You’ll find comparisons by both vendors claiming superiority for varied reasons. Here’s the Mellanox side http://www.mellanox.com/pdf/whitepapers/WP_RoCE_vs_iWARP.pdf & here’s Chelsio’s take http://www.chelsio.com/roce/ & http://www.moderntech.com.hk/sites/default/files/whitepaper/V09_iWAR_Summary_WP_0.pdf. It’s good to look at your needs and map them. But I cannot declare a winner. I did notice that at least one vendor of SOFS/CiB uses iWARP. Is that a statement? And if so, about what? Price? Ease of use? Performance/cost?

What I do find is that Chelsio is really laying into RoCE, as you can see here http://www.chelsio.com/wp-content/uploads/2011/05/RoCE-The-Grand-Experiment1.pdf, http://www.chelsio.com/roce-whitepaper/, http://www.chelsio.com/wp-content/uploads/2011/05/RoCE-FAQ-1204121.pdf So that raises the question: are they right, or are they scared of RoCE, as the InfiniBand boys are out to eat their lunch?

My take on this for now

iWARP is way easier to get started with. That’s for sure. RoCE is firmware sensitive (NIC, switches) and driver sensitive (NIC). Configuring DCB on your switches is usually followed by a reboot of that switch, so you might not do that so easily in production. Depending on where in the stack those switches live, you really need Force10 VLT, Cisco vPC, Arista MLAG or independent redundant switches to get away with it. RoCE loves green field. Stacking, I hear you say? I don’t like stacking at that spot in the stack, as firmware updates will make you suffer through a single point of failure.

Disclaimer: RoCE in itself does not DEMAND/REQUIRE DCB, but the consensus is that it will work better with it, especially under heavy load. Whether SMB Direct over RoCE requires DCB is another question. For all practical purposes I’m working from the premise that it does for a production environment. But as you can do RoCE RDMA between two NICs with no DCB switch in between, the hard requirement for DCB is clearly not there. Mind you, not using DCB might not be smart with regard to QoS & error handling (there’s no TCP/IP goodness handling this for you). But I’m no expert on this subject. Paul Grun, however, is, and he’s involved with RoCE at OpenFabrics: https://www.openfabrics.org/component/search/?searchword=Paul+grun&ordering=&searchphrase=all They tend to know their stuff. Read some of the comments below this article and you’ll learn a lot: http://www.hpcwire.com/hpcwire/2010-04-22/roce_an_ethernet-infiniband_love_story.html

But PFC isn’t Valhalla either, and some claim you can just forget about it and build non-blocking networks. I guess you could if your pockets are deep enough. And you might go a very long way without the need for RDMA. Many do … and when you talk to network people & vendors they can’t agree either, as everyone is on the same learning curve but from a different perspective. There is no one size fits all & it all depends.

iWARP doesn’t require DCB, so you can get away with cheaper switches. Or with not so cheap switches that don’t support DCB (choose wisely). So “cheaper switches” is probably true at the low end. But even very economically priced switches from Dell have good DCB support. Some other vendors who are more expensive don’t.

DCB is uncharted terrain for SMB Direct purposes & new to many of us. So if you want to do RDMA the easy way … go iWARP. As said, the use of DCB for PFC/ETS is not mandatory in that case, you’ll get great results and it’s easy. Mind you, you’ll still be dabbling with DCB if you want to do lossless magic in the switches. Why, you say? Well, that “converged network” story makes it kind of interesting to do so, and PFC and DCBx/TLV are generic and can be leveraged for other things than iSCSI or FCoE. And for all practical purposes SMB 3.0 with SMB Direct is a storage protocol, since Windows Server 2012 made it so (CSV). Or do you do DCB for iSCSI/FCoE & iWARP for SMB Direct? After all, there are only 2 lossless queues to be had. But hey, how many do you need? Choices, choices and no vast pool of experienced practitioners yet.
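To make the “only 2 lossless queues” trade-off a bit more tangible, here is a minimal Python sketch that sanity-checks a DCB plan on paper before you touch a switch. Everything in it is an assumption for illustration: the `TrafficClass` and `validate_plan` names are made up, the priorities and bandwidth shares are arbitrary, and the limit of 2 lossless classes is simply the figure mentioned above (your hardware may differ). It does not configure anything.

```python
from dataclasses import dataclass

# Figure taken from the text above; treat it as hardware dependent.
MAX_LOSSLESS_CLASSES = 2


@dataclass(frozen=True)
class TrafficClass:
    name: str
    priority: int        # 802.1p priority, 0-7
    bandwidth_pct: int   # ETS bandwidth share for this class
    lossless: bool       # True if PFC is enabled for this priority


def validate_plan(classes):
    """Return a list of problems found in a DCB plan (empty list means OK)."""
    problems = []
    priorities = [c.priority for c in classes]
    if any(p < 0 or p > 7 for p in priorities):
        problems.append("802.1p priorities must be in the range 0-7")
    if len(set(priorities)) != len(priorities):
        problems.append("each traffic class needs its own priority")
    total = sum(c.bandwidth_pct for c in classes)
    if total != 100:
        problems.append(f"ETS shares add up to {total}%, expected 100%")
    lossless = [c.name for c in classes if c.lossless]
    if len(lossless) > MAX_LOSSLESS_CLASSES:
        problems.append(
            f"{len(lossless)} lossless classes requested, "
            f"only {MAX_LOSSLESS_CLASSES} available"
        )
    return problems


# Hypothetical plan: priorities and percentages are illustrative only.
plan = [
    TrafficClass("SMB Direct", priority=3, bandwidth_pct=50, lossless=True),
    TrafficClass("iSCSI", priority=4, bandwidth_pct=30, lossless=True),
    TrafficClass("Everything else", priority=0, bandwidth_pct=20, lossless=False),
]
print(validate_plan(plan) or "plan looks consistent")
```

Add a third lossless class (say FCoE) to the plan and the check complains, which is exactly the “choices, choices” problem.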

iWARP routes; it’s not bound to a single Ethernet broadcast domain. That could be useful info depending on your environment & needs. I’ll note that I leverage RDMA for East-West traffic, not North-South, so for me this might not be an issue. The time that I do “Shared Nothing Live Migration” from on-premises to the cloud has not arrived yet.

The Mellanox cards in my neck of the woods were 35% cheaper than Chelsio (SFP+).
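As a rough illustration of what that means in practice, the Python sketch below checks whether two endpoints sit in the same IP subnet. It rests on two assumptions of mine: that one subnet maps to one Ethernet broadcast domain in your network, and that the RoCE flavor in question stays on Ethernet layer 2 as described earlier. The addresses and the `same_subnet` helper are hypothetical.

```python
import ipaddress


def same_subnet(host_a, host_b, prefix_len):
    """True if both addresses fall in the same subnet of the given prefix length."""
    net_a = ipaddress.ip_interface(f"{host_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{host_b}/{prefix_len}").network
    return net_a == net_b


# Hypothetical East-West pair on one subnet: either flavor could work.
print(same_subnet("10.10.180.11", "10.10.180.12", 24))

# Hypothetical pair on different subnets: traffic has to be routed,
# which is where iWARP has the edge according to the text above.
print(same_subnet("10.10.180.11", "10.10.190.12", 24))
```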

What about scalability? “iWARP doesn’t scale that well” is stated left and right, but I think that might often be based on older information. Chelsio makes a strong case for iWARP scalability, especially when it comes to long distances, multiple hops & routing.

Again, your mileage may vary. But for “the smaller environments” that want to leverage RDMA with SMB 3.0, I’d say that iWARP is the easiest path & will do just fine. Now, if you’re already into lossless Ethernet for iSCSI or working with FCoE, you might have all the hardware you need & the experience to deal with DCB. The latter might not always be true, however. Most people have lossless Ethernet for iSCSI or FCoE set up by the vendor or by consultants who use well-defined step-by-step guides. These do not exist for the RoCE variant of SMB 3.0 over RDMA.

The case for RoCE can be made as well. Some claim that a high volume of connections consumes a lot of memory when using iWARP, and that TCP’s flow and reliability controls are less suited to large-scale datacenters & cloud deployments due to performance issues. And where iWARP does not know multicast, RoCE does, and that could be important to you.

So why did I, and why do I still, do RoCE?

So why did I walk the walk? Basically because just talking the talk isn’t enough. We considered it an investment in our education. DCB is not going away (the abstraction isn’t there yet and won’t be for a while) and we need to gain knowledge of it to both handle it and make informed decisions. By the way, once you go lossless you might leverage DCB/PFC with iWARP as well, just like you do for iSCSI (leveraging DCBx/TLV). Keep in mind that DCB is key in converged networking and as such deserves your attention. That’s why I chose not to avoid it but gave battle. DCB is all over the place when it comes to converged networking (iSCSI, FCoE), so we need to learn the good, the bad and the ugly. Until the day, perhaps, that the hardware stack is so good and powerful and has so much bandwidth that TCP/IP never needs its built-in protection against packet loss. Hmmmmmm, I remember people saying that about 10Gbps, but then they wanted to send everything over 2*10Gbps pipes and it became an issue again?

It’s early days yet, but you have to give credit to Microsoft for getting RDMA/DCB on the radar screens of more of the world’s virtualization & storage admins than ever before. It’s not a well-established segment yet and it will be interesting to see how this all turns out. I do know that now that I’ve figured out a thing or two about RoCE, I won’t be intimidated & won’t make choices out of fear. And do remember that if you have plenty of idle CPU cycles & 10Gbps, you might not even need RDMA. The value for me and my employers is the knowledge gained. DCB has its role to play, but we’ll leverage iWARP or RoCE without a preference. Today you have 2 choices. RoCE is the newer one while iWARP has been around longer, and both have avid proponents, it seems.

I know one thing. If you need or want RDMA in any existing 10Gbps environment with minimal effort & no risk to the existing switch infrastructure, you’ll use iWARP, it seems.

Epilogue

You sit there staring at a truckload of VMs with 120GB of memory assigned in total being evacuated in +/- 70 seconds, while doing a Shared Nothing Live Migration between the same hosts and without adding CPU load … and have DCB for SMB 3.0 running on your switches … Yes!
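For a feel of what those numbers mean, here is a back-of-the-envelope calculation in Python. It assumes the full 120GB actually crossed the wire, that GB means 10^9 bytes, and it ignores protocol overhead; the `average_gbps` helper is just mine for illustration.

```python
def average_gbps(gigabytes, seconds):
    """Average throughput in Gbps for moving `gigabytes` (10^9 bytes) in `seconds`."""
    return gigabytes * 8 / seconds


rate = average_gbps(120, 70)
print(f"{rate:.1f} Gbps")  # 13.7 Gbps
```

Under those assumptions the average is well above what a single 10Gbps port can carry, so more than one port (or faster links) must have been doing the work.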

Remember, “What we do in life, echoes in eternity.” You might think now that I’m a bit nutty, but I assure you that in my quest to find someone who had hands-on experience configuring DCB on switches for SMB Direct with RoCE, I had to turn to myself, as no one seemed to have done it. I’ll be sharing more info on our setup and configurations in the future. Once you wrap your head around the concepts, you understand why things are done and how. Therein lies the value for me.