I was working on a migration of a nice two node Windows Server 2012 Hyper-V cluster to Windows Server 2012 R2. The cluster consist out of 2 DELL R610 servers and a DELL MD3200 shared SAS disk array for the shared storage. It runs all the virtual machines with infrastructure roles etc. It’s a Cluster In A Box like set up. This has been doing just fine for 18 months but the need for features in Windows Server 2012 R2 became too much to resists. As the hardware needs to be recuperated and we have a maintenance windows we use the copy cluster roles scenario that we have used so many times before with great success. It’s the Perform an in-place migration involving only two servers scenario documented on TechNet and as described in one of my previous blogs Migrating a Hyper-V Cluster to Windows 2012 R2 for your convenience.

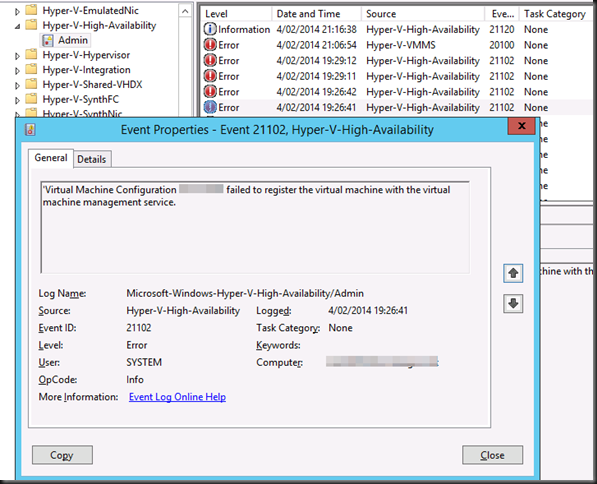

Virtual Machine Configuration ‘VM01’ failed to register the virtual machine with the virtual machine service

As the source host was running on Windows Server 2012 we could have done the live migration scenario but the down time would be minimal and there is a maintenance window. So we chose this path.

So we performed a good health check. of the source cluster and made sure we had no snapshots left hanging around. Yes it’s supported now for this migration scenario but I like to have as few moving parts as possible during a migration.

It all went smooth like silk. After shutting down the VMs on the source cluster node, bringing the CSV off line (and un-presenting the LUN from the source node for good measure), we present that LUN to the target host. We brought the CSV on line and when that was completed successfully we were ready to bring the virtual machines on line and that failed …

Log Name: Microsoft-Windows-Hyper-V-High-Availability-Admin

Source: Microsoft-Windows-Hyper-V-High-Availability

Date: 4/02/2014 19:26:41

Event ID: 21102

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: VM01.domain.be

Description:

‘Virtual Machine Configuration VM01’ failed to register the virtual machine with the virtual machine management service.

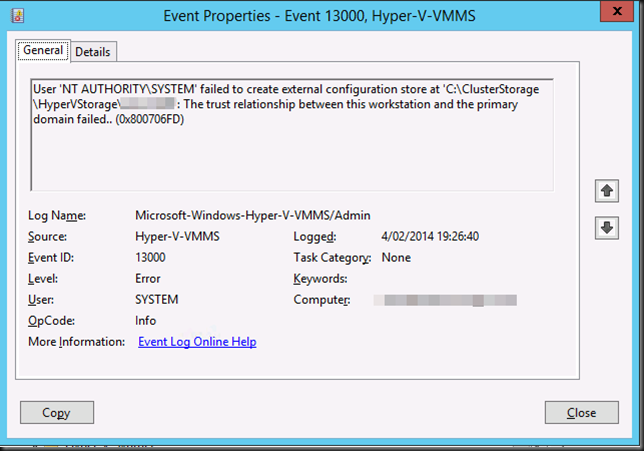

Let’s dive into the other event logs. On the host the application security and system event log are squeaky clean. The Hyper-V event logs are pretty empty or clean to except for these events in the Hyper-V-VMMS Admin log.

Log Name: Microsoft-Windows-Hyper-V-VMMS-Admin

Source: Microsoft-Windows-Hyper-V-VMMS

Date: 4/02/2014 19:26:40

Event ID: 13000

Task Category: None

Level: Error

Keywords:

User: SYSTEM

Computer: VM01.domain.be

Description:

User ‘NT AUTHORITYSYSTEM’ failed to create external configuration store at ‘C:ClusterStorageHyperVStorageVM01’: The trust relationship between this workstation and the primary domain failed.. (0x800706FD)

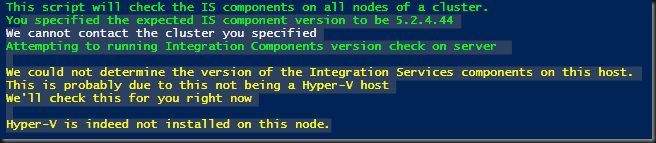

Bingo. It must be the fact that no domain controller is available. It’s completely self contained cluster and both domain controller virtual machines are highly available and reside on the CSV. Now the CSV does come on line without a DC since Windows Server 2012 so that’s not the issue. it’s the process of registering the VMs that fails without a DC in an Active Directory environment.

Getting passed this issue

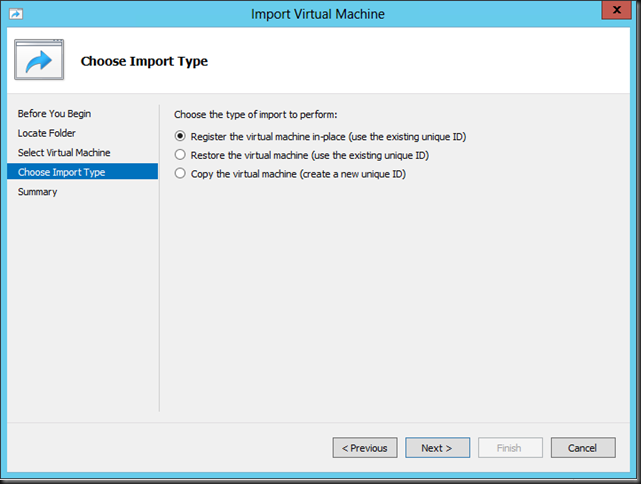

There are multiple ways to resolve this and move ahead with our cluster migration. As the environment is still fully functional on the source cluster I just removed a DC virtual machine from high availability on the cluster. I shut it down and exported it. I than copied it over to the node of the new cluster (we’re going to nuke the source host afterwards and install W2K12R2, so we moved it to the new host where it could stay) where I put it on local storage and imported it. For this is used the “Register the virtual machine in-place option”. I did not make it high available.

After verifying that we could ping the DC and it was up and running well we tried the final phase of the migration again. It went as smooth as we have come to expect!

Other options would have been to host the DC virtual machine on a laptop or other server. If you could no longer get to the the DC for export & import or heck even a shared nothing migration depending on your environment can help you out of this pickle. A restore from backup would also work. But here in that 2 node all in one cluster our approach was fast and efficient.

So there you go. Tip to remember. Virtualizing domain controllers is fully supported, no worries there but you need to make sure that if you have a dependency on a DC you don’t have the DC depending on that dependency. It’s chicken an egg thing.