While speaking (What’s new in Failover Clustering in Windows Server 2012 R2) at and attending the Microsoft Technical Summit 2014, I’m taking the opportunity to see how Microsoft Germany and its partners run a workshop based on the IT Camps they have been delivering over the past year. There is a lot of content to deliver, and both trainers, Carsten Rachfahl (Rachfahl IT-Solutions GmbH) and Bernhard Frank (Partner Technology Strategist (Hosting), Microsoft), are doing that magnificently.

One thing I note is that they sure do put in a lot of effort. The one I’m attending requires some server infrastructure, a couple of switches, cabling for over 50 laptops, etc. These were neatly packed into road cases, and the 50+ laptops were placed, cabled and deployed using PXE boot/WDS the night before. Yes, even in the era of cloud you need hardware, especially if you’re doing an IT Camp on “Datacenter Modernization” (think private & hybrid infrastructure design and deployment).

Not bypassing this aspect of private cloud building adds value to the workshop and is made possible with the help of Wortmann AG. Yes, the attendees get to deploy Storage Spaces, Scale-Out File Server, networking, etc. They don’t abstract any of the underlying technologies away. I like that a lot, as it adds value and realism.

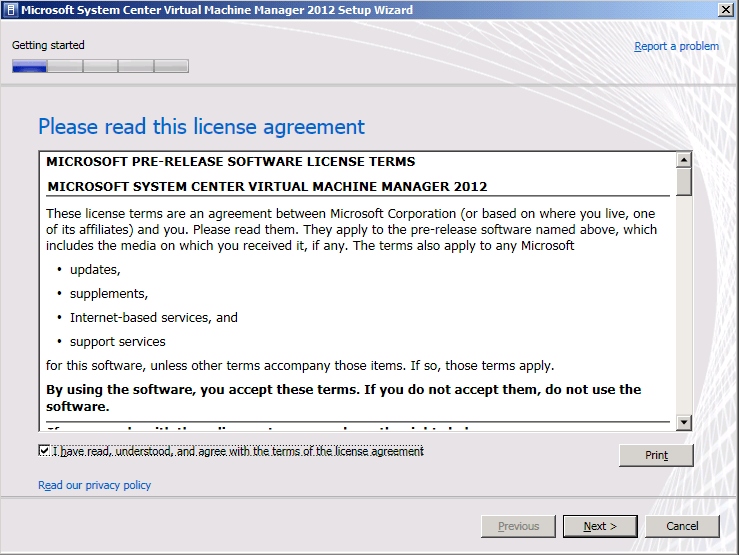

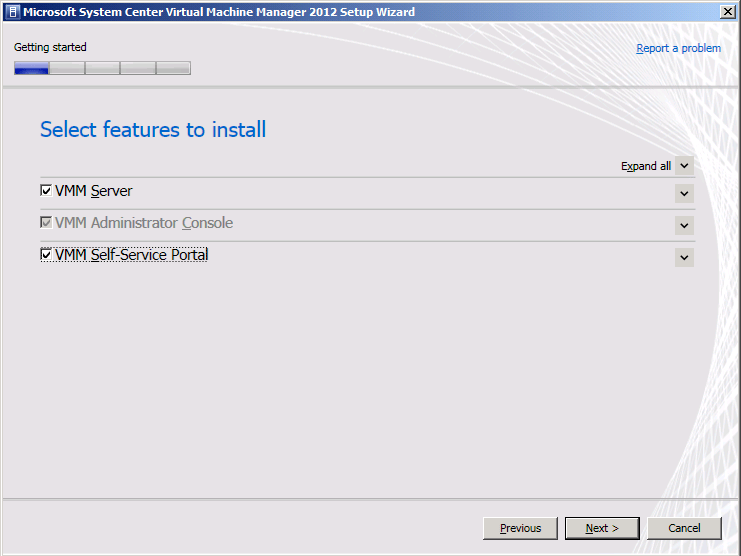

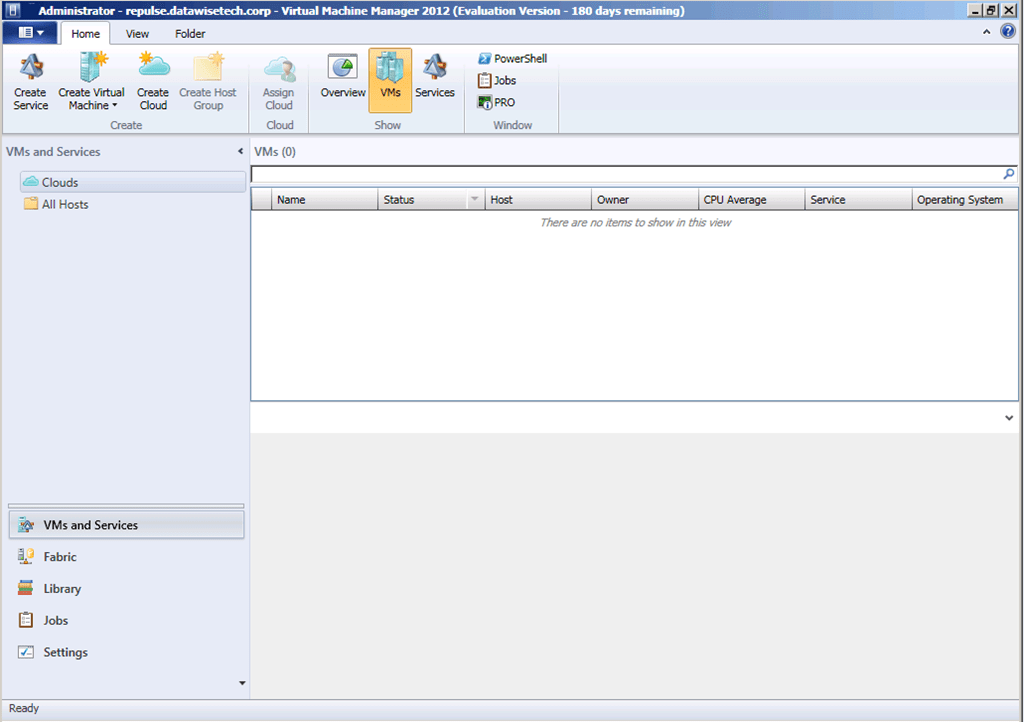

I’m happy to see that they leverage the real-world experience of experts such as fellow Hyper-V MVP Carsten Rachfahl, who helps hosting companies and enterprises deploy these technologies. Storage, Scale-Out File Server, Hyper-V clusters, System Center and self-service (Azure Pack) are the technologies used to achieve the goals of the workshop.

The smart use of PowerShell (workflows, PDT) automates the process and frees up time to discuss and explain the technologies and design decisions. They take great care to explain the steps and tools used so the attendees can use these later in their own environments. Talking about their own experiences and mistakes helps the attendees avoid common mishaps and move along faster.

The fact that they have added workshops like this to the summit adds value. I think it’s a great idea to hold them on the last day, as this means attendees can put the information they gathered from 2 days of sessions into practice. This helps them understand the technologies better.

There is very little criticism to be given on the content and the way they deliver it. I have to say that it’s all very well done. Perhaps they make private cloud look a bit too easy. Bernhard, Carsten, well done guys, I’m impressed. If you’re based in Germany and you or your team members need to get up to speed on how these technologies can be leveraged to modernize your data center, I can highly recommend these guys and their workshops/IT Camps.
