Introduction

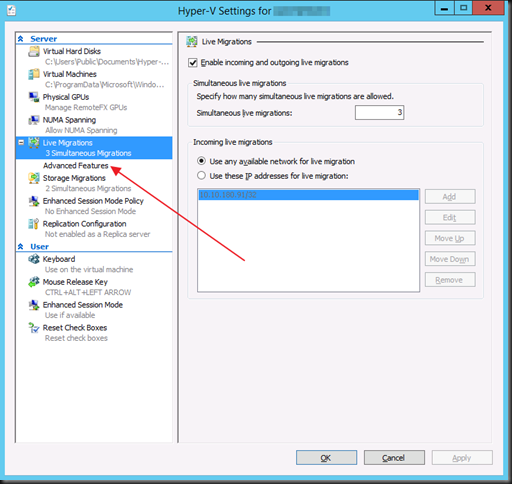

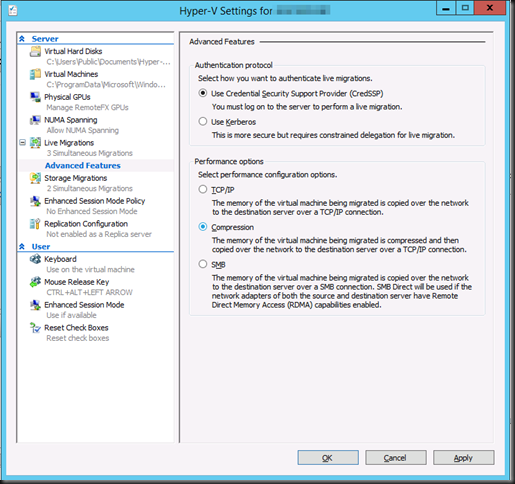

With Windows Server 2012 R2 (Preview) we can do Live Migrations over TCP/IP like before, either using a single NIC or by teaming two or more NICs. We also have compression and Multichannel. In this blog post we’ll play with TCP/IP and Multichannel.

- We have a dual port 10Gbps Mellanox RDMA card (RoCE) in each host, but for these tests we have disabled the RDMA capabilities of these NICs. As in the RDMA blog post, one pair of ports is interconnected via a direct attach cable. The other pair is connected over a Force10 S4810 switch. We’re using the in-box Windows Server 2012 R2 Preview drivers for everything, as we have found that other drivers install improperly (or not at all) on this release and cause issues.

- We are using one VM running Windows Server 2012 RTM with upgraded Integration Services components. This VM has 4 vCPUs and 55GB of fixed memory assigned. For this purpose we had no workload running in the VM. The servers are standard DELL PowerEdge R720 kit running the Windows Server 2012 R2 Preview bits.

Results

No Performance tweaking

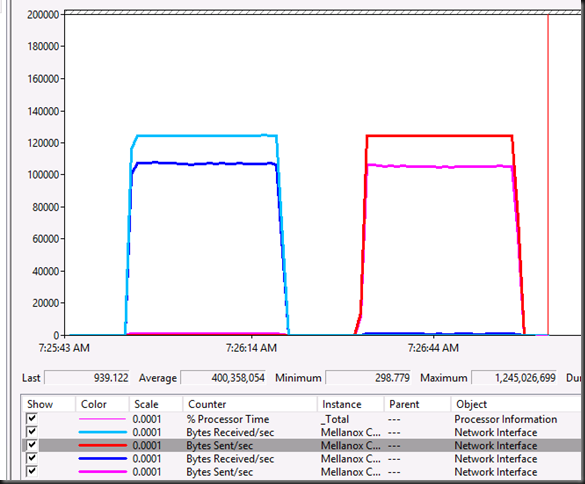

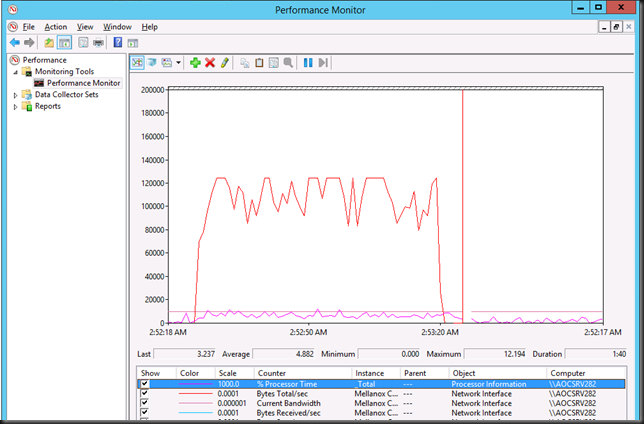

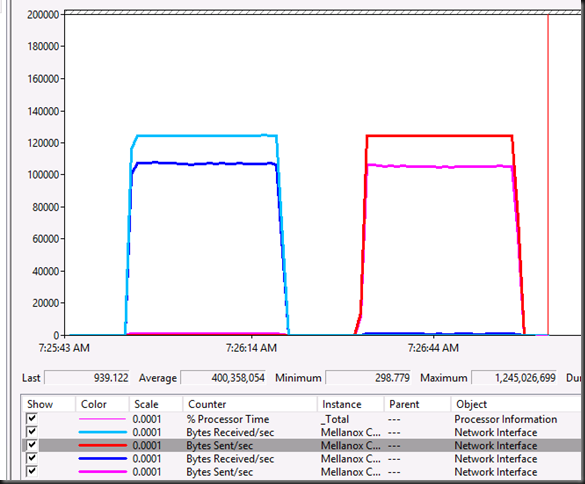

We test a Live Migration over one 10Gbps NIC. It’s fast, but I don’t like the jigsaw effect and we don’t push the bandwidth to the limit yet.

We can move the VM with 55GB of memory in about 70 seconds on average. You have a bit more CPU load here, but nothing too bad. Most often the Hyper-V host has ample CPU cycles left, so this will not hinder performance. I also remember Aidan Finn’s work testing a truckload of concurrent live migrations on a host with only one low-end CPU with 4 cores, which made it throttle the number of live migrations it would start to safeguard the workload.

So let’s do what we’ve always done: turn on Jumbo Frames. This helps us peak at 1.25GB/s and improves speeds (10% or more), but the jigsaw is still a bit visible. As I think we can do better, we bring in the big guns and optimize our power settings as discussed in Still Need To Optimizing Power Settings On DELL 12th Generation Servers For Lightning Fast Hyper-V Live Migrations? and Optimizing Live Migrations with a 10Gbps Network in a Hyper-V Cluster. Now, with C states & C1E disabled and both processor & memory optimized for performance, we see this.

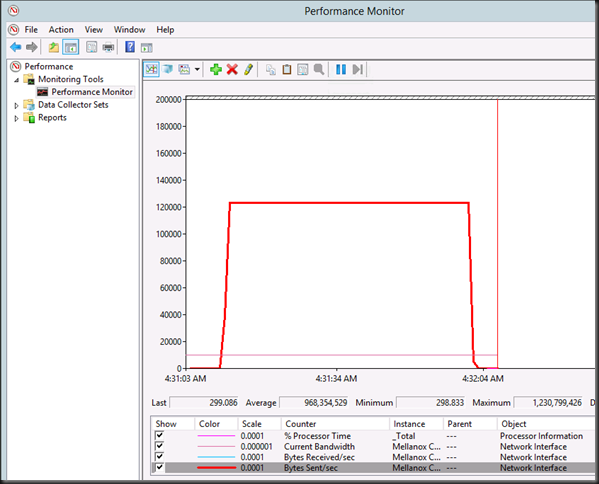

Now that’s power. We have faster Live Migrations (54 seconds on average) with top bandwidth use during the entire migration process, and we see 50% better blackout times. What’s not to like here? CPU usage isn’t that bad and you’ll likely have some cycles to spare, unless your VMs already use over 60-70% of the CPU, and then you need to fix that anyway as you’re out of the safe zone. So, Jumbo Frames & Power Optimization are key!
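To get a feel for why Jumbo Frames matter on a 10Gbps link, here is a rough back-of-the-envelope sketch. It is not a measurement from these tests. It assumes an MTU of 1500 versus 9000 bytes, IPv4 and TCP headers without options, and the standard 38 bytes of Ethernet overhead per frame; the function names are made up for illustration.

```python
# Rough model of why Jumbo Frames help on a 10Gbps link.
# Assumptions (not from the post): MTU 1500 vs 9000, IPv4 + TCP headers
# without options (20 + 20 bytes), and 38 bytes of Ethernet overhead per
# frame on the wire (preamble 8, header 14, FCS 4, inter-frame gap 12).

LINK_BPS = 10e9          # 10Gbps line rate
ETH_OVERHEAD = 38        # bytes per frame outside the MTU
IP_TCP_HEADERS = 40      # bytes per frame inside the MTU


def payload_efficiency(mtu: int) -> float:
    """Fraction of the bytes on the wire that carry TCP payload."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


def frames_per_second(mtu: int) -> float:
    """Full-size frames per second needed to saturate the link."""
    return LINK_BPS / 8 / (mtu + ETH_OVERHEAD)


def payload_gbytes_per_second(mtu: int) -> float:
    """Upper bound on TCP payload throughput in GB/s (10**9 bytes)."""
    return LINK_BPS / 8 / 1e9 * payload_efficiency(mtu)


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} payload, "
          f"{payload_gbytes_per_second(mtu):.2f} GB/s max, "
          f"{frames_per_second(mtu):,.0f} frames/s")
```

Under these assumptions the header savings alone are worth only about 4% of throughput, but the host has to handle almost 6 times fewer frames per second. That lower per-frame processing cost is probably where the rest of the gain comes from, so test it in your own environment.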

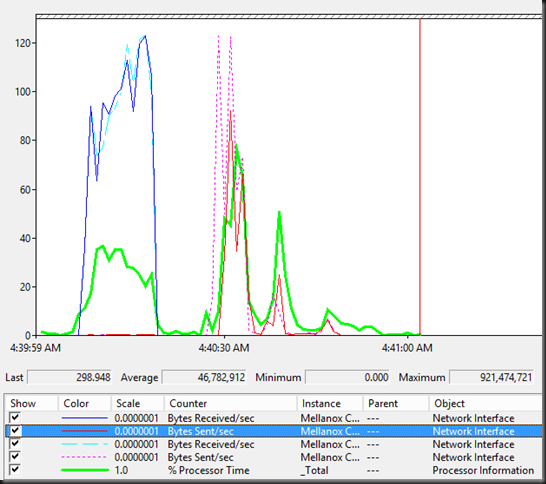

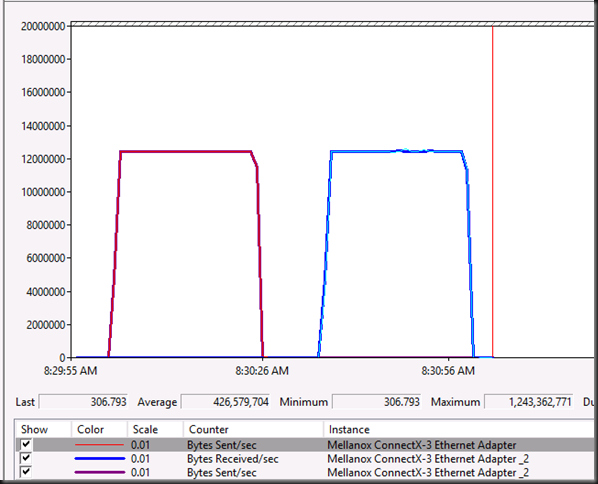

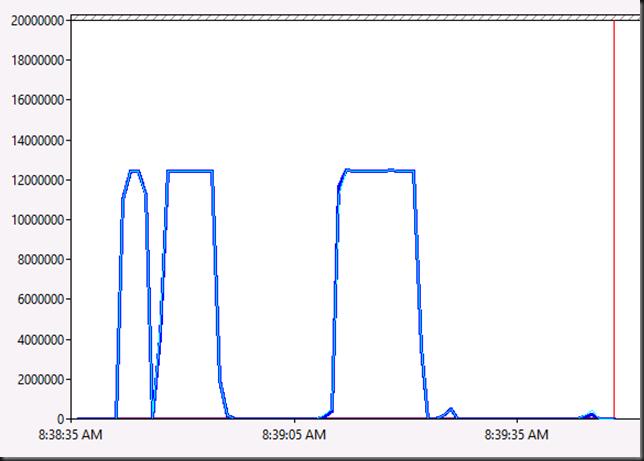

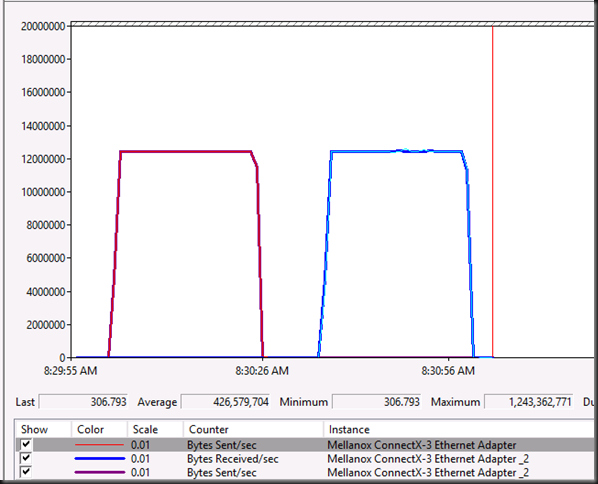

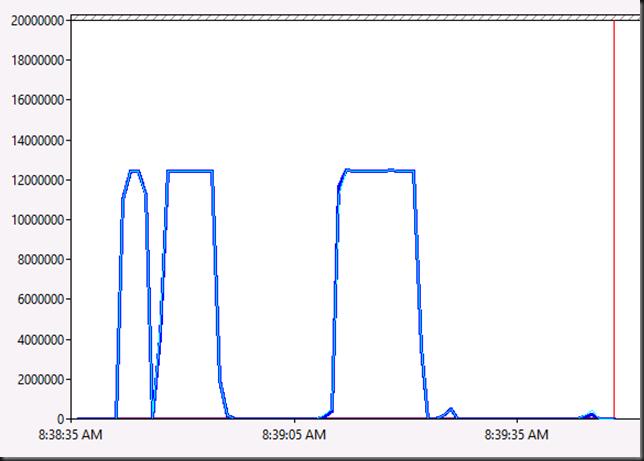

Of course we’re always looking for better and more. In Live Migration terms that means speed. So let’s see what Multichannel can do for us and switch to SMB. As we have disabled RDMA on the NICs, this “only” gives us Multichannel. The cool thing is, the second NIC doesn’t have Jumbo Frames enabled yet. I have always found Jumbo Frames to matter, and now with Multichannel I have a very nice way of demonstrating / visualizing this. Here’s a screenshot of moving our test VM back and forth. As you can see, we have one NIC with Jumbo Frames disabled and one with Jumbo Frames enabled. You don’t have to guess which one is which, I guess. Yup, Jumbo Frames do matter when you push to the limits. We are getting about 31 seconds on average here with the 55GB VM.

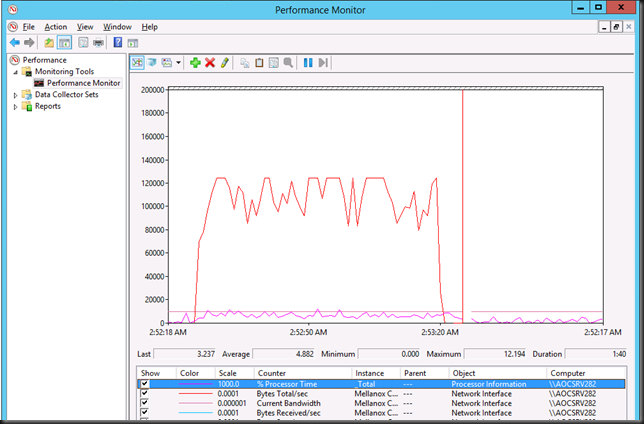

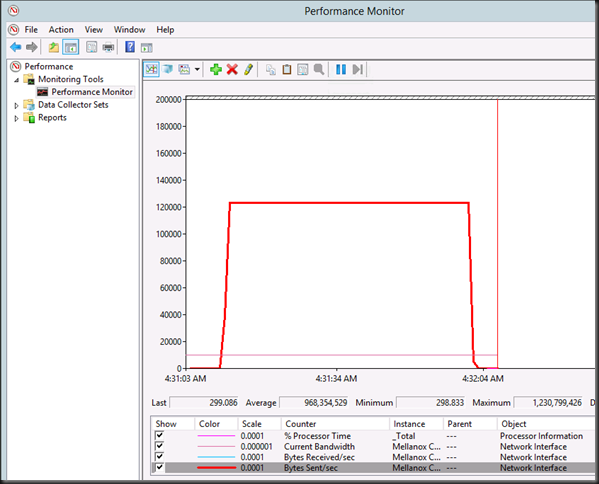

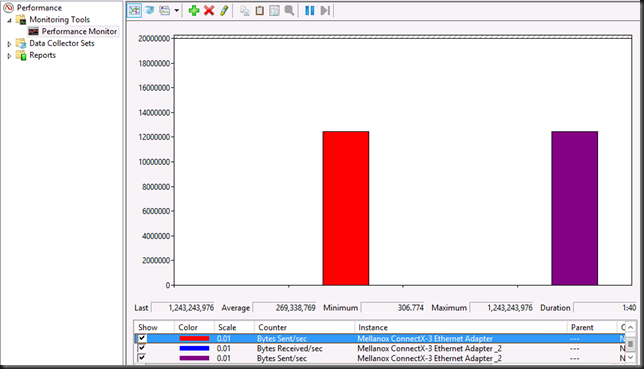

Here’s the same with Jumbo Frames enabled on both NICs. And guess what, we just cut another 3 seconds off the Live Migration time. 28 seconds flat.
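To put the timings so far side by side, this small sketch converts them into effective throughput. It is an approximation: it assumes the 55GB of memory is sent exactly once (no re-sent dirty pages), that GB means 10**9 bytes, and that the whole run counts as transfer time. The labels and function names are just for illustration.

```python
# Effective throughput for the average timings quoted in this post.
# Assumptions: the VM's 55GB of memory is sent exactly once, GB means
# 10**9 bytes, and setup/blackout time is counted as transfer time.

VM_MEMORY_GB = 55
NIC_GBPS = 10

# (label, average seconds from the post, number of 10Gbps NICs in use)
RUNS = [
    ("TCP/IP, 1 NIC, no tweaks", 70, 1),
    ("TCP/IP, 1 NIC, Jumbo Frames + power settings", 54, 1),
    ("Multichannel, Jumbo Frames on 1 of 2 NICs", 31, 2),
    ("Multichannel, Jumbo Frames on both NICs", 28, 2),
]


def effective_gbps(memory_gb: float, seconds: float) -> float:
    """Average throughput in Gbps if memory_gb is moved in `seconds`."""
    return memory_gb * 8 / seconds


def line_rate_share(memory_gb: float, seconds: float, nics: int,
                    nic_gbps: float = NIC_GBPS) -> float:
    """Average throughput as a fraction of the combined NIC line rate."""
    return effective_gbps(memory_gb, seconds) / (nics * nic_gbps)


for label, seconds, nics in RUNS:
    gbps = effective_gbps(VM_MEMORY_GB, seconds)
    share = line_rate_share(VM_MEMORY_GB, seconds, nics)
    print(f"{label:46s} {gbps:5.1f} Gbps  {share:4.0%} of line rate")
```

With these assumptions the four runs average out at roughly 6.3, 8.1, 14.2 and 15.7 Gbps. The averages sit below the peaks in the graphs because every run also includes the start and the blackout phase of the migration.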

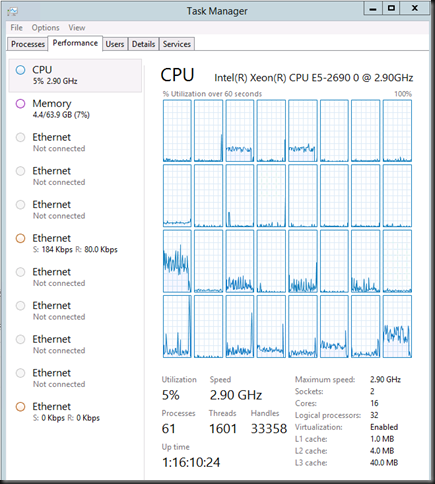

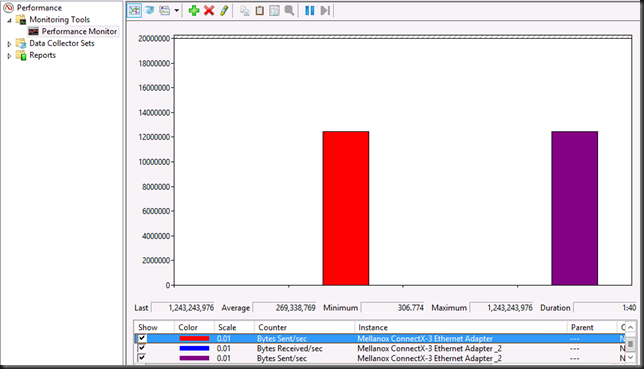

In a histogram it looks like this. That’s what maximum throughput looks like.

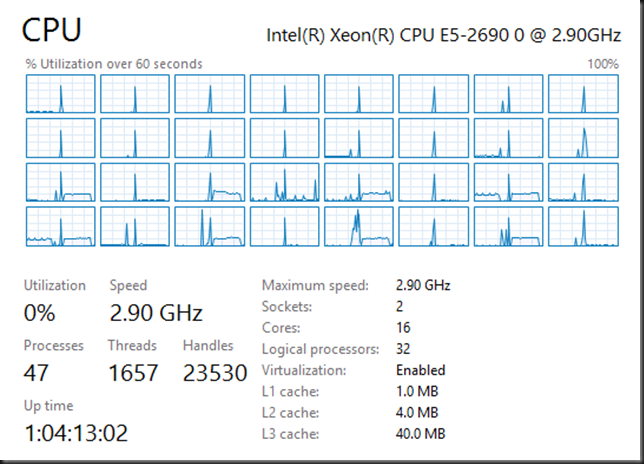

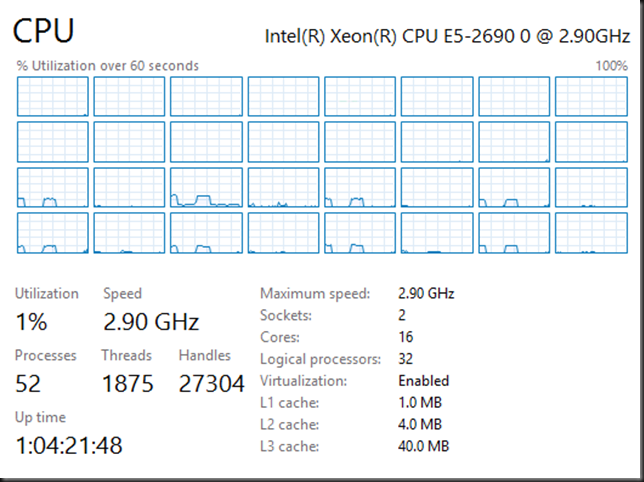

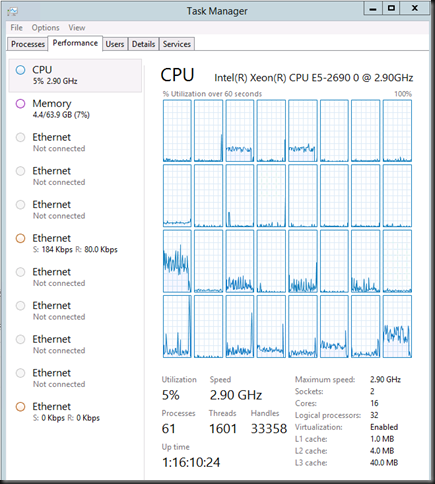

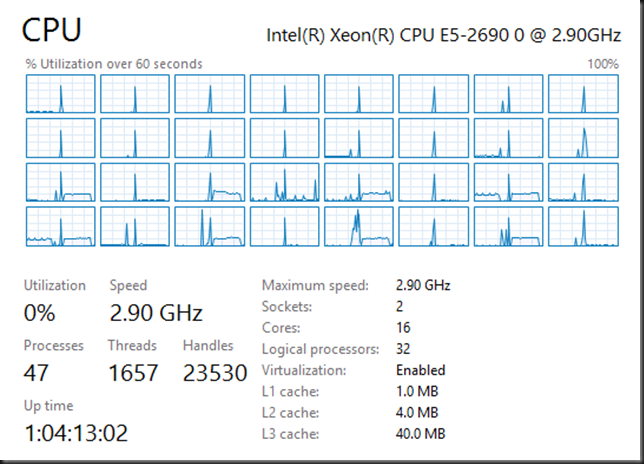

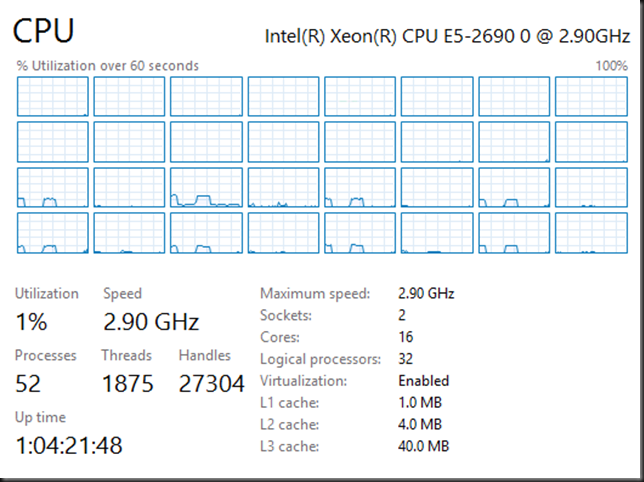

Let’s see what our CPUs are up to during all this. Some cores are rather busy dealing with the interrupts. But this is just one VM.

You might wonder why, with 2*10Gbps, you only see 2*4 CPUs doing work while the default RSS queues are at 8 and you’d expect 16. It’s because Multichannel defaults to 4 connections per NIC, so we get 8. This is configurable, and testing will show what difference this could make and whether it’s wise to tweak. It all depends.
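The arithmetic behind those 8 busy cores can be sketched like this. It is a deliberately simplified model, not a description of how Windows actually schedules RSS work, and the function and parameter names are made up for illustration.

```python
# Assumed simplification: per NIC, the number of busy cores is capped by
# whichever is smaller, the RSS queues or the Multichannel connections.

def busy_cores(nics: int, rss_queues: int, connections_per_nic: int) -> int:
    """Estimate how many cores handle network interrupts."""
    return nics * min(rss_queues, connections_per_nic)


# Defaults described above: 8 RSS queues, 4 Multichannel connections per NIC.
print(busy_cores(nics=2, rss_queues=8, connections_per_nic=4))

# The naive expectation if Multichannel used all 8 RSS queues on each NIC.
print(busy_cores(nics=2, rss_queues=8, connections_per_nic=8))
```

The first call gives the 8 cores we see at work, the second gives the 16 you might have expected.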

Sure, this is only one large memory VM, but what if we do more? Like 6 VMs with 9GB of memory each. Not too bad.

What if that host is running 30 or 40 VMs? That adds up. Well, that’s what RDMA is for! But that’s yet another blog post.

Do keep in mind this is all just the Preview bits … MSFT does two things between now and the release of R2: they kill bugs and tweak for speed. I tune my Live Migration settings in production so that I get the most bang for the buck, and I try to avoid dips in bandwidth like you see above. So the work is not finished yet.

Conclusion

I can conclude that all the hints & tips of the past to optimize Live Migration still hold true. Yes, you should enable Jumbo Frames and yes, you should still optimize your host for performance over power savings. That said, the days when you’d only get 16% of bandwidth usage out of a 10Gbps NIC on a host tuned for power savings have been long gone ever since Windows Server 2012. But if you feel the need for (even more) speed …, then by all means go for it.

If you want to conserve energy & be environmentally sound, make the most of the least number of nodes possible and use Dynamic Optimization / Power Optimization to shut them down when not needed and fire them up to rise to the occasion.

Oh yes, test, people, test. Trust but verify, and determine the best possible configuration for both your environment and needs.

Now we’ll have a look at compression … but again that’s another blog post!