Introduction

While this post is about an offline Azure DevOps Windows Server 2012 R2 build server with failing builds, let me first talk about the deprecation of TLS 1.0/1.1. Now this is just my humble opinion, as someone who has been implementing TLS 1.3, QUIC and even SMB over QUIC: the phasing out of TLS 1.0/1.1 in favor of TLS 1.2 has moved at a snail’s pace. But hey, here we are, TLS 1.0/1.1 are still working for Azure DevOps Services, many years after all the talk, hints, tips, hunches and efforts to get rid of them. Microsoft did finally disable them at the end of November 2021 (see Deprecating weak cryptographic standards (TLS 1.0 and TLS 1.1) in Azure DevOps), but on January 31st 2022 they had to re-enable them since too many customers ran into issues. Sigh.

Tech Debt

The biggest reason for these issues is tech debt, i.e. old server versions. So it was in this case, but with a twist. Why was the build server still running Windows Server 2012 R2? Well, in this case the developers won’t allow an upgrade or migration of the server to a newer version because they are scared they won’t be able to get the configuration running again and won’t be able to build their code anymore. This is not a joke, but it is better to laugh than to cry. That place chased away most good developers long ago and has few left who are willing to fight the good fight, as there is no reward for doing the right things, quite the opposite.

Offline Azure DevOps Windows 2012 R2 build server with failing builds

But Microsoft, rightly so, must disable TLS 1.0/1.1 and will do so on March 31st 2022. To help customers detect issues ahead of time, they already disabled it temporarily on March 22nd (https://orgname.visualstudio.com) and March 24th (https://dev.azure.com/orgname) from 09:00 to 21:00 UTC.

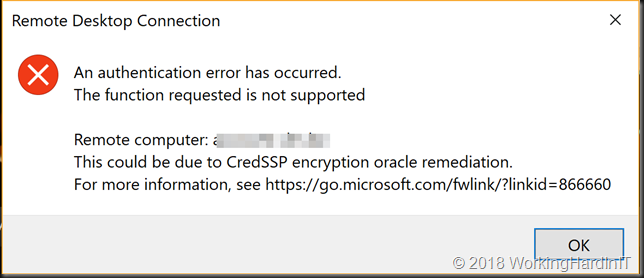

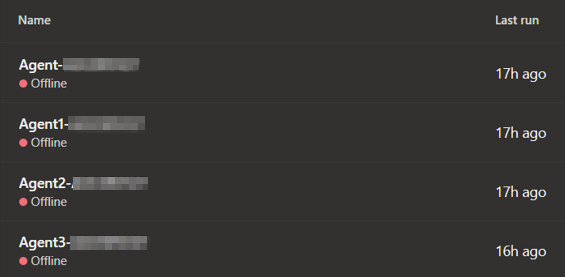

Guess what? On March 24th I got a call to troubleshoot Azure DevOps Services build server issues. A certain critical on-premises build server showed as offline in Azure DevOps, and their builds, with a deadline of March 25th, were failing. Who you gonna call?

That’s right, WorkingHardInIT! Sure enough, a quick test with (Invoke-WebRequest -Uri status.dev.azure.com -UseBasicParsing).StatusDescription did not return OK.
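For repeated checks, the one-liner can be wrapped in a small function. This is a minimal sketch, not part of the original troubleshooting: the function name `Test-AzureDevOpsStatus` is hypothetical, and the explicit `https://` prefix is my assumption to make sure the request exercises the TLS handshake.

```powershell
# Hypothetical helper (not from the original post): returns $true only when
# the status endpoint answers with HTTP status description 'OK'.
function Test-AzureDevOpsStatus {
    param(
        # Assumption: explicit https:// so the TLS handshake is what gets tested.
        [string]$Uri = 'https://status.dev.azure.com'
    )
    try {
        $response = Invoke-WebRequest -Uri $Uri -UseBasicParsing -ErrorAction Stop
        return ($response.StatusDescription -eq 'OK')
    }
    catch {
        # A failed TLS handshake surfaces as an exception, not as a status code.
        Write-Warning "Request to $Uri failed: $($_.Exception.Message)"
        return $false
    }
}
```

Calling `Test-AzureDevOpsStatus` without arguments gives a simple true/false answer, and the warning text usually hints at whether the failure happened during the secure channel setup.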

Now what made it interesting is that this Windows Server 2012 R2 had been set up some years ago so that it would only support TLS 1.2, because they had issues with cipher mismatches and SQL Server (see Intermittent TLS issues with Windows Server 2012 R2 connecting to SQL Server 2016 running on Windows Server 2016 or 2019). So why was it failing, and why did it not fail before?

Windows Server 2012 R2 with Azure DevOps Services from March 31st 2022

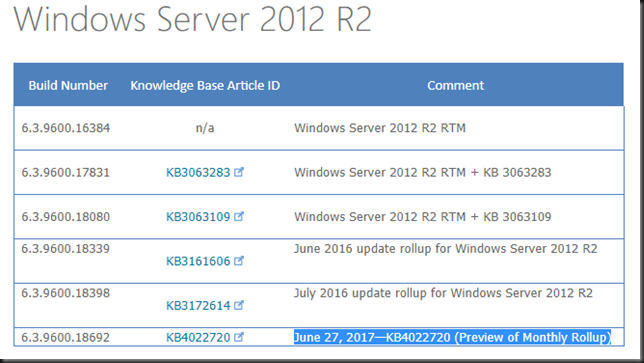

To run Windows Server 2012 R2 with Azure DevOps Services from March 31st 2022, there are some requirements, listed in Deprecating weak cryptographic standards (TLS 1.0 and 1.1) in Azure DevOps Services.

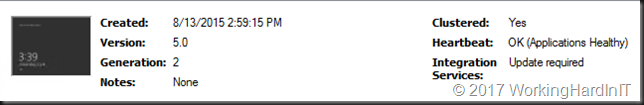

Well, first of all, that server only had .NET 4.6 installed. .NET 4.7 or higher is a requirement after March 31st 2022 for connectivity to Azure DevOps Services.

So, I checked that there were working backups and made a Hyper-V checkpoint of the VM. I then installed .NET 4.8 and rebooted the server. I ran (Invoke-WebRequest -Uri status.dev.azure.com -UseBasicParsing).StatusDescription again, but no joy.
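One way to verify the installed .NET Framework version is to read the `Release` value from the registry and compare it with the minimum release numbers Microsoft documents. The sketch below is an illustration, not the exact check I ran on that server; the function names are hypothetical.

```powershell
# Hypothetical helpers: map the .NET Framework 'Release' DWORD to a version
# label, using the documented minimum release numbers per version.
function Get-DotNetVersionFromRelease {
    param([Parameter(Mandatory = $true)][int]$Release)
    if ($Release -ge 528040) { return '4.8 or later' }
    if ($Release -ge 461808) { return '4.7.2' }
    if ($Release -ge 461308) { return '4.7.1' }
    if ($Release -ge 460798) { return '4.7' }
    if ($Release -ge 394802) { return '4.6.2' }
    if ($Release -ge 394254) { return '4.6.1' }
    if ($Release -ge 393295) { return '4.6' }
    return 'older than 4.6'
}

# .NET 4.7 (release 460798) is the minimum named in the requirements.
function Test-DotNetMeetsMinimum {
    param([Parameter(Mandatory = $true)][int]$Release)
    return ($Release -ge 460798)
}

# Read the value on the machine itself (Windows only; skipped if the key is absent).
$ndpKey = 'HKLM:\SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full'
if (Test-Path $ndpKey -ErrorAction SilentlyContinue) {
    $release = [int](Get-ItemProperty -Path $ndpKey -Name Release -ErrorAction SilentlyContinue).Release
    $label = Get-DotNetVersionFromRelease -Release $release
    $ok = Test-DotNetMeetsMinimum -Release $release
    Write-Output "Installed .NET Framework: $label (release $release), meets minimum: $ok"
}
```

A server with only .NET 4.6 reports a release number below 460798, so `Test-DotNetMeetsMinimum` returns false until a newer framework is installed.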

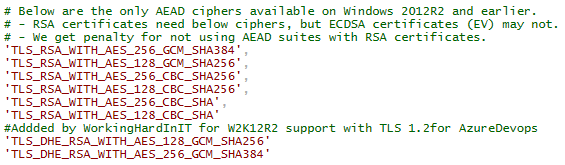

There is another requirement that you must pay extra attention to: the enabled cipher suites! Specifically for Windows Server 2012 R2, the two cipher suites below are the only ones that will work with Azure DevOps Services.

- TLS_DHE_RSA_WITH_AES_256_GCM_SHA384

- TLS_DHE_RSA_WITH_AES_128_GCM_SHA256
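Whether these two suites are in the configured cipher suite order can be checked from PowerShell. The sketch below assumes the order was set through the policy registry value `Functions` under `HKLM:\SOFTWARE\Policies\Microsoft\Cryptography\Configuration\SSL\00010002`, which is where hardening scripts typically write it. If that key does not exist, the operating system default order applies, and this sketch does not inspect it. The function name is hypothetical.

```powershell
# The two suites required for Windows Server 2012 R2 (from the list above).
$requiredSuites = @(
    'TLS_DHE_RSA_WITH_AES_256_GCM_SHA384',
    'TLS_DHE_RSA_WITH_AES_128_GCM_SHA256'
)

# Hypothetical helper: returns the required suites that are absent from a
# comma-separated cipher suite list.
function Get-MissingCipherSuite {
    param(
        [string]$ConfiguredSuites,
        [string[]]$Required
    )
    $configured = @($ConfiguredSuites -split ',' |
        ForEach-Object { $_.Trim() } |
        Where-Object { $_ })
    return @($Required | Where-Object { $configured -notcontains $_ })
}

# Assumption: the suite order is enforced via this policy key (Windows only).
$policyKey = 'HKLM:\SOFTWARE\Policies\Microsoft\Cryptography\Configuration\SSL\00010002'
if (Test-Path $policyKey -ErrorAction SilentlyContinue) {
    $functions = (Get-ItemProperty -Path $policyKey -Name Functions -ErrorAction SilentlyContinue).Functions
    # -join handles both a single string and a multi-string registry value.
    $missing = @(Get-MissingCipherSuite -ConfiguredSuites ($functions -join ',') -Required $requiredSuites)
    if ($missing.Count -eq 0) {
        Write-Output 'Both required cipher suites are in the configured order.'
    }
    else {
        Write-Warning "Missing cipher suites: $($missing -join ', ')"
    }
}
```

The comparison is kept in a separate function so it can also be run against a cipher list copied from another server or from a script, without touching the registry.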

On that old build server they were missing. Why? We enforced TLS 1.2 only a few years back, but the PowerShell script used to do so did not enable these ciphers. The code itself is fine. You can find it at Setup Microsoft Windows or IIS for SSL Perfect Forward Secrecy and TLS 1.2 | Hass – IT Consulting.

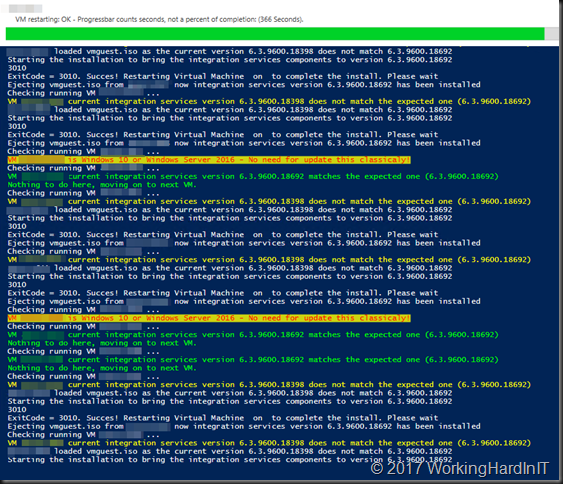

But pay attention to the part about the AEAD ciphers available on Windows Server 2012 R2. The above two ciphers were missing there, and I added them.

Add those two ciphers to the part for Windows Server 2012 R2 and run the script again. That requires a server reboot. After the reboot, our check with (Invoke-WebRequest -Uri status.dev.azure.com -UseBasicParsing).StatusDescription returned OK. The build server was online again in Azure DevOps, and they could build whatever they wanted via Azure DevOps.

Conclusion

Tech debt is all around us. I avoid it as much as possible. Now, on this occasion I was able to fix the issue quite easily. But I walked away telling them to either move the builds to Azure or replace the VM with Windows Server 2022 (they won’t). There are reasons, such as cost and consistent build speed, to stay with an on-prem virtual machine. But then one should keep it in tip-top shape. The situation where no one dares touch it is disconcerting. And in the end, I come in and do touch it, minimally, so that they are able to work. Touching tech is unavoidable, from monthly patching, over software upgrades, to operating system upgrades. Someone needs to do this. Either you take that responsibility or you let someone (Azure) do that for you.