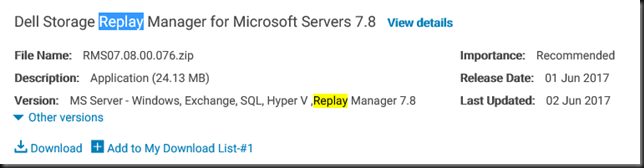

Compellent Replay Manager 7.8 and Windows Server 2016 clusters in mixed mode or at cluster functional level 8

Consider this a quick publish with tips for when you combine Replay Manager 7.8, Compellent and Windows Server 2016. Many of you will be doing a cluster operating system rolling upgrade of your Windows Server 2012 R2 clusters to Windows Server 2016. Even if you have done your homework and made sure your hardware is supported, you can still run into a surprise. As long as you’re in mixed mode (W2K12R2 mixed with W2K16 nodes) or have not updated the cluster functional level to 9 (Windows Server 2016), you will have a few issues.

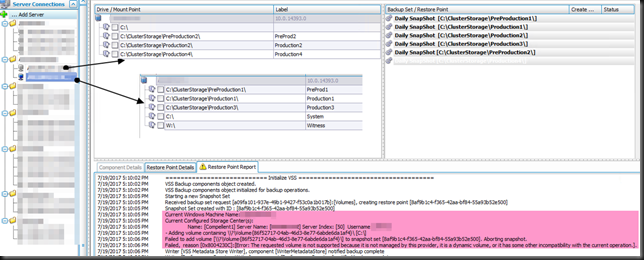

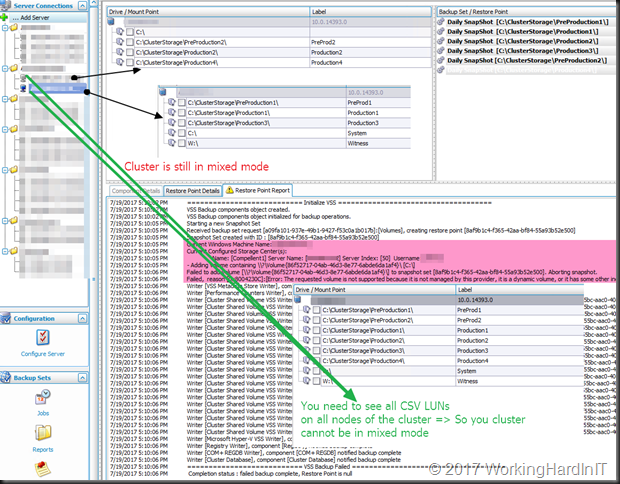

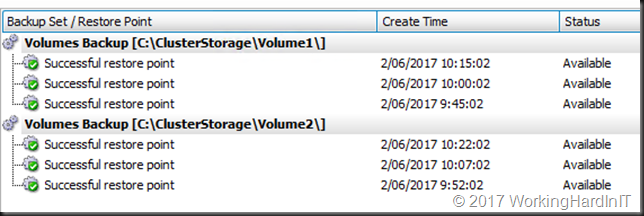

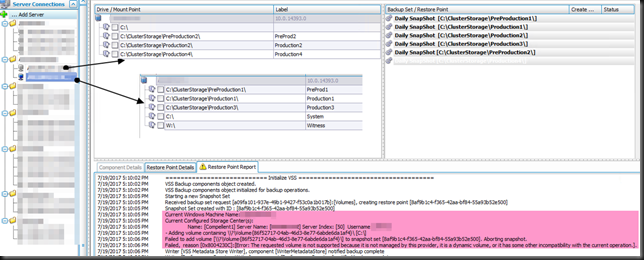

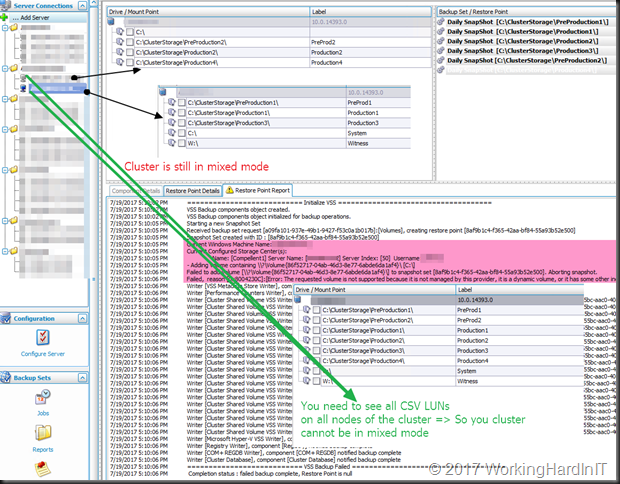

In Replay Manager 7.8 itself you’ll notice that, under local volumes, each node of your cluster only sees the CSV LUNs it currently owns. Normally you’ll see all of the CSV LUNs of the (Hyper-V) cluster on all of the nodes of that cluster, so this is not the expected behavior. It leads to failed restore points when you run a snapshot from a host that is not the owner of the CSV.

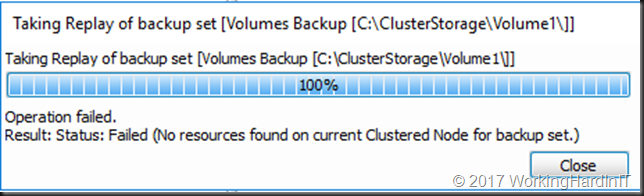

On top of that when you try to run a backup job it will fail. The reason given is:

The requested volumes is not supported because it is not managed by the provider, is a dynamic volume, or it has some other incompatibility with the current operation.

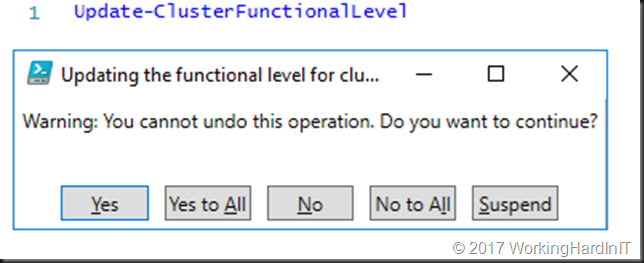

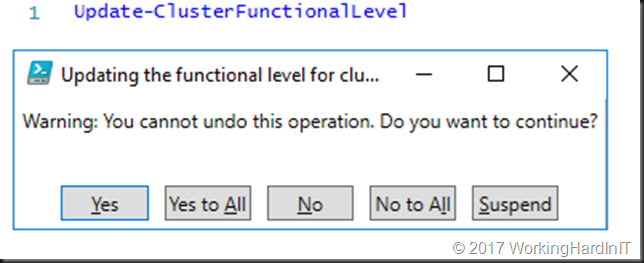

The fix? Just upgrade your cluster to the Windows Server 2016 cluster functional level (level 9).

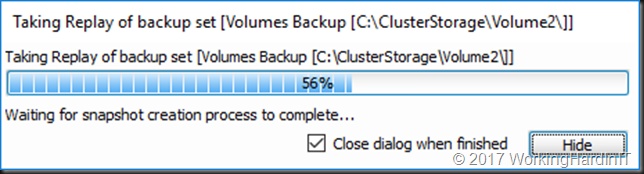

It’s as easy as that. The moment you upgrade your cluster functional level to 9 you will see all the CSVs of the cluster on every node of that cluster you connect to. At that moment the replays will also work. That’s OK: you want to move swiftly through the rolling upgrade anyway, and upgrade the functional level once you’re comfortable that all drivers and firmware are working fine. You do not want to stay at the lower cluster version too long, but upgrade to benefit from the new capabilities in Windows Server 2016 Failover Clustering. You do need to know this when you start your upgrades.
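Before running Replay Manager jobs during a rolling upgrade, it helps to know where the cluster stands. On the cluster itself, `(Get-Cluster).ClusterFunctionalLevel` reports the current level and `Update-ClusterFunctionalLevel` performs the upgrade. The decision described above can be sketched in a few lines of Python. This is only an illustration: the function name, the OS labels and the node names are hypothetical, and the inputs are assumed to have been collected from the cluster beforehand.

```python
# Cluster functional levels as used in this post:
# 8 = Windows Server 2012 R2, 9 = Windows Server 2016.
LEVEL_2016 = 9


def replay_manager_ready(functional_level, node_os_versions):
    """Return (ready, reason) for running Replay Manager 7.8 jobs on CSVs.

    functional_level: int, e.g. the value of (Get-Cluster).ClusterFunctionalLevel
    node_os_versions: dict of node name -> OS label ("2012R2" or "2016"),
                      hypothetical labels chosen for this sketch
    """
    # Any node not yet on Windows Server 2016 means the cluster is in mixed mode.
    old_nodes = sorted(
        name for name, os_label in node_os_versions.items() if os_label != "2016"
    )
    if old_nodes:
        return False, "mixed mode: still on an older OS: " + ", ".join(old_nodes)
    # All nodes upgraded, but the functional level has not been raised yet.
    if functional_level < LEVEL_2016:
        return False, "cluster functional level is still %d" % functional_level
    return True, "cluster functional level 9"


# Hypothetical two-node cluster that finished the OS upgrade
# but has not had its functional level raised yet.
print(replay_manager_ready(8, {"NODE-A": "2016", "NODE-B": "2016"}))
# (False, 'cluster functional level is still 8')
```

Only the last case (all nodes on Windows Server 2016 and level 9) matches the state in which the CSVs showed up on every node again.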

Close your backup apps, restart the Replay Manager service on the cluster nodes, refresh / reconnect in the backup apps, and voila. You’ll see the image you are used to in Replay Manager 7.8 (green text / arrows) and the backup jobs will work, as will any other backup product using the Compellent Replay Manager 7.8 hardware VSS provider.

I hope this helps some of you out there. So yes, Replay Manager 7.8 supports Windows Server 2016 clusters with CSV LUNs, but if you upgraded your cluster via cluster operating system rolling upgrade, you need to have upgraded your cluster functional level! Until then, Replay Manager 7.8 isn’t going to work very well.

So there you go, that’s another reason to move through that process as fast and smoothly as you can.

Still missing in action for Hyper-V with Replay Manager 7.8

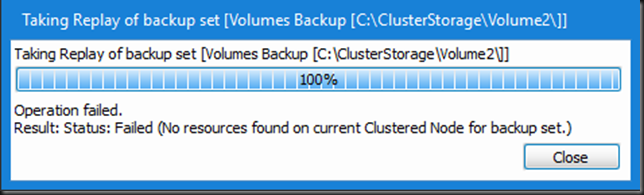

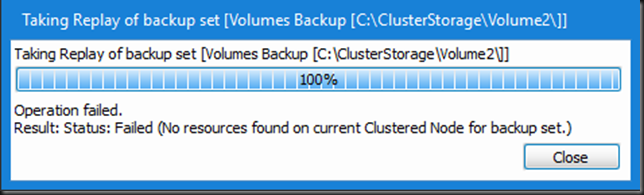

I’d really like Replay Manager to be a bit more cluster friendly. No matter what node you are connected to, it shows you all CSV LUNs in the cluster. But since Replay Manager 7.8 with Windows Server 2016, when you run a job manually you must start it while connected to the cluster node that owns the CSV, or the job will fail with “No resources found on current cluster node for backup set”.

This was not the case with Windows Server 2012 (R2) and earlier versions of Replay Manager. That combination did throw some benign errors in the event logs on the cluster node, but it did work. I would love for DELLEMC to make sure the Replay Manager client is smart enough to detect which node owns the CSV and start the job from that node. That would be a lot more user friendly. At the very least it should indicate which of the CSV LUNs you see are owned by the cluster node you are connected to. Instead, when you launch a backup job for a CSV that’s not owned by the node you are connected to, the job quits/fails. They could detect the node they need, launch the job on that node and show it to you. That avoids having to find out yourself which cluster node to connect to in Replay Manager when you need to run an out-of-schedule job manually. The tech/logic is already there, as the scheduled jobs get launched on the correct node.
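The lookup being asked for here is small. As a rough sketch in Python (this is not how Replay Manager is implemented; the function name and the CSV and node names are made up), take the ownership that `Get-ClusterSharedVolume` reports in its `OwnerNode` property and group a job's volumes by the node that has to run them:

```python
def nodes_for_job(csv_owners, job_volumes):
    """Group a job's CSVs by the cluster node that currently owns them.

    csv_owners: dict of CSV name -> owner node
                (the information Get-ClusterSharedVolume shows as OwnerNode)
    job_volumes: list of CSV names in the backup set
    Returns a dict of node -> list of CSVs to snapshot from that node.
    """
    plan = {}
    for volume in job_volumes:
        owner = csv_owners.get(volume)
        if owner is None:
            raise KeyError("unknown CSV: " + volume)
        plan.setdefault(owner, []).append(volume)
    return plan


# Hypothetical cluster: three CSVs spread over two nodes.
owners = {"CSV01": "NODE-A", "CSV02": "NODE-B", "CSV03": "NODE-A"}
print(nodes_for_job(owners, ["CSV01", "CSV03"]))
# {'NODE-A': ['CSV01', 'CSV03']}
```

With that mapping in hand, a manual job could be sent to the owning node instead of failing on whichever node you happen to be connected to.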

It would also be great if they could finally build the logic into Replay Manager for the Hyper-V VM backups to know on what CSV and Hyper-V node a VM lives and deal with that. Sure, it might cause more snapshots to be made, but that’s an invalid argument: when the VMs are on the same node but on different CSVs, that’s already happening. Really, one VM per job to avoid this isn’t a great answer.