There, I said it. In switching, just like in real life, being independent often beats the alternatives, which in switching means stacking. Windows Server 2012 NIC teaming in switch independent, active-active mode makes this possible. And if you do want or need stacking for link aggregation (i.e. more bandwidth), you might go the extra mile and opt for vPC (Virtual Port Channel à la Cisco) or VLT (Virtual Link Trunking à la Force10/Dell) instead.

What, have you gone nuts? Nope. Windows Server 2012 NIC teaming gives us great redundancy, even with cheaper 10Gbps switches.

What I hate about stacking is that during a firmware upgrade the whole stack goes down, so there is no redundancy there. Also, on the cheaper switches stacking often costs you a number of 10Gbps ports (there are no dedicated stacking ports). The only way to work around this is to design your infrastructure so you can evacuate the nodes in that rack, so that upgrading the stack doesn’t affect the services. That’s nice if you can do it, but it’s also rather labor intensive. If you can’t evacuate a rack (which has effectively become your “unit of upgrade”) and you can’t afford a vPC or VLT kind of redundant switch configuration, you might be better off running your 10Gbps switches independently and leveraging Windows Server 2012 NIC teaming in switch independent mode, active-active. The only reason not to do so would be the need for bandwidth aggregation in all possible scenarios, which only LACP/static teaming can provide, but in that case I really prefer vPC or VLT.
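To make the firmware upgrade argument concrete, here is a minimal Python sketch under stated assumptions: each host has two 10Gbps NICs, one cabled to each of two switches, teamed in switch independent mode. It assumes, as described above, that a stack reboots as a unit during a firmware upgrade (stacks with in-service upgrades would behave differently), while independent switches and vPC/VLT peers can be upgraded one at a time. The `Topology` class and the function names are hypothetical, purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical model: each host has one 10Gbps NIC cabled to switch "A"
# and one to switch "B", teamed in switch independent mode.
SWITCHES = frozenset({"A", "B"})


@dataclass(frozen=True)
class Topology:
    name: str
    # True if a firmware upgrade reboots all switches together (a classic stack);
    # False if the switches can be upgraded one at a time.
    upgrade_reboots_all: bool


def upgrade_waves(topology: Topology) -> list[frozenset[str]]:
    """Return the groups of switches that are down at the same time during an upgrade."""
    if topology.upgrade_reboots_all:
        return [SWITCHES]                                # the whole stack goes down at once
    return [frozenset({s}) for s in sorted(SWITCHES)]    # one switch per wave


def host_stays_connected(topology: Topology) -> bool:
    """The team keeps working as long as at least one member's switch is still up."""
    # SWITCHES - down is the set of switches still running in that wave.
    return all(len(SWITCHES - down) > 0 for down in upgrade_waves(topology))


INDEPENDENT = Topology("independent switches", upgrade_reboots_all=False)
STACKED = Topology("stacked switches", upgrade_reboots_all=True)
VPC_VLT = Topology("vPC/VLT peers", upgrade_reboots_all=False)

if __name__ == "__main__":
    for t in (INDEPENDENT, STACKED, VPC_VLT):
        print(f"{t.name}: connected during upgrade = {host_stays_connected(t)}")
```

The model deliberately ignores bandwidth: it only answers whether a host keeps any network path while the switches are being upgraded, which is the redundancy gap the stack has in this scenario.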

Independent 10Gbps Switches

Benefits:

- Cheaper 10Gbps switches

- No potential loss of 10Gbps ports for stacking

- Switch redundancy in all scenarios if the cluster networking is set up correctly

- Switch configuration is very simple

Drawbacks:

- You won’t get > 10Gbps aggregated bandwidth in every possible NIC teaming scenario

Stacked 10Gbps Switches

Benefits:

- Stacking is available with cheaper 10Gbps switches (often at the cost of 10Gbps ports)

- Switch redundancy (but not during firmware upgrades)

- Get 20Gbps aggregated bandwidth in any scenario

Drawbacks:

- Potential loss of 10Gbps ports

- Firmware upgrades bring down the stack

- Potentially more “complex” switch configuration

vPC or VLT 10Gbps Switches

Benefits:

- 100% switch redundancy

- Get > 10Gbps aggregated bandwidth in any possible NIC teaming scenario

Drawbacks:

- More expensive switches

- More “complex” switch configuration

So all in all, if you come to the conclusion that 10Gbps is a big pipe that will serve your needs and that aggregating those pipes via teaming is not needed, you might be better off with cheaper 10Gbps switches, leveraging Windows Server 2012 NIC teaming in switch independent mode in an active-active configuration. You optimize your 10Gbps port count as well. It’s cheap, it reduces complexity and it doesn’t stop you from leveraging SMB Multichannel/RDMA.

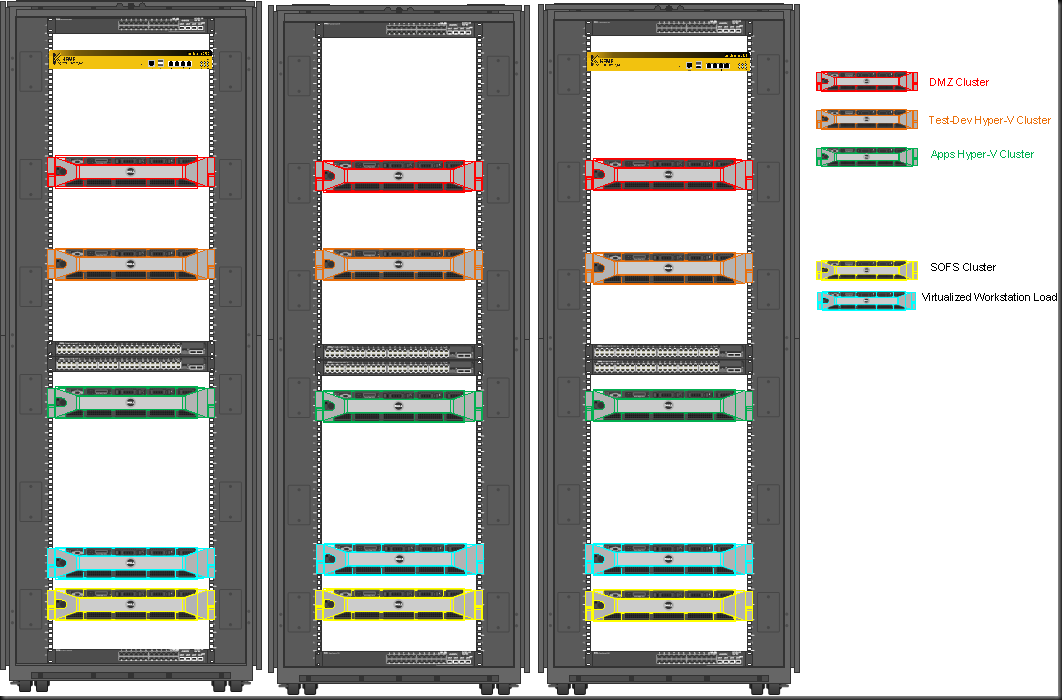

So right now I’m either in favor of switch independent 10Gbps networking, or I go all out for a vPC (Virtual Port Channel à la Cisco) or VLT (Virtual Link Trunking à la Force10/Dell) like setup and forgo stacking altogether. As said, if you’re willing and able to evacuate all the nodes on a stack/rack, you can work around the drawback (the colors in the racks indicate the same clusters). That’s not always possible, and while it sounds like a great idea, I’m not convinced.
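The reasoning above boils down to a small decision tree. The following Python sketch is one hedged reading of it, not a formal sizing method; the `Design` enum, the `choose_design` function, its parameters and the returned notes are all hypothetical labels chosen for illustration.

```python
from enum import Enum


class Design(Enum):
    """The three options compared in this post (labels are illustrative)."""
    INDEPENDENT = "independent switches, switch independent teaming (active-active)"
    VPC_VLT = "vPC/VLT switches, LACP/static teaming"
    STACKED = "stacked switches"


def choose_design(needs_aggregation: bool,
                  can_afford_vpc_vlt: bool,
                  can_evacuate_rack: bool) -> tuple[Design, str]:
    """Hypothetical helper: return a design plus a short note explaining it."""
    if not needs_aggregation:
        # A single 10Gbps pipe serves your needs: cheapest and simplest option.
        return Design.INDEPENDENT, "cheap, simple, no 10Gbps ports lost to stacking"
    if can_afford_vpc_vlt:
        # Aggregation in every teaming scenario plus redundancy during upgrades.
        return Design.VPC_VLT, "aggregation plus switch redundancy during upgrades"
    if can_evacuate_rack:
        # The rack becomes the unit of upgrade: evacuate it, then upgrade the stack.
        return Design.STACKED, "evacuate the rack before each stack firmware upgrade"
    # Aggregation is required, but the stack's upgrade outage cannot be avoided.
    return Design.STACKED, "warning: a stack firmware upgrade takes the rack's network down"


if __name__ == "__main__":
    design, note = choose_design(needs_aggregation=False,
                                 can_afford_vpc_vlt=False,
                                 can_evacuate_rack=False)
    print(design.name, "-", note)
```

Note that stacking only comes out of this tree when aggregation is a hard requirement and vPC/VLT is out of budget, which mirrors the preference stated above.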

When the shit hits the fan, you need as little to worry about as possible. And yes, I know firmware upgrades are supposed to be easy and planned events. But then there is reality, and sometimes it bites, especially when you cannot evacuate the workload until you’ve resolved a networking issue with a firmware upgrade. Choose your poison wisely.


Your idea of independent switches is nice, unless shit hits the fan with L2 redundancy by means of Spanning Tree for instance 🙂

So I’d prefer stackable switches with in-service software upgrade (ISSU), like the HP A5800 series with their IRF stack.

I have several stacks and have performed several ISSUs without major problems.

Please elaborate. http://blog.ioshints.info/2011/01/intelligent-redundant-framework-irf.html He’s not convinced 😉 All joking aside, IRF is more another way of doing things compared to vPC or VLT. Stacking in itself offers no protection against Spanning Tree issues. Simple, good network design and technologies like vPC and VLT do, and IRF has its place too.


10Gb switch prices dropped by a factor of 10 last month. The Netgear ProSafe Plus XS708E costs less than €100 per 10Gb port.

And we’re seeing switches with a smaller number of ports, making it easier to avoid overspending in smaller environments.

You say here that using a tNIC (switch independent) “doesn’t stop you from using SMB multichannel/RDMA”. I’ve read contradictory information saying that RDMA on tNICs is not possible. Do you lose RDMA when you team RDMA NICs? For example here: http://blogs.technet.com/b/josebda/archive/2013/03/30/q-amp-a-i-only-have-two-nics-on-my-hyper-v-host-should-i-team-them-or-not.aspx

The ones saying it’s impossible make sense to me, since RDMA bypasses the entire stack like SR-IOV does, requiring DCB for QoS because anything in between (vSwitch or tNIC) is bypassed. Is this the case, or does it perhaps depend on the RDMA NICs used, since some say, like above, that it is possible?

Who says switch independent means a tNIC? I sure never did. I’m not teaming the RDMA NICs 🙂 You can use a switch independent team for the vSwitch/guest access and then do RDMA on the other, non-teamed NICs. No teaming there.
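The layout described in this reply (a switch independent team for the vSwitch, RDMA on separate non-teamed NICs) can be expressed as a simple sanity check. This is a minimal Python sketch, assuming four NICs split across two independent switches; the NIC names, switch labels and the `layout_problems` helper are hypothetical, and the rule that each group should span both switches is an assumption drawn from the independent switch design, not a Windows requirement.

```python
from dataclasses import dataclass


# Hypothetical host layout, for illustration only: names and switch labels are made up.
@dataclass(frozen=True)
class Nic:
    name: str
    switch: str              # which independent top-of-rack switch it is cabled to
    rdma_capable: bool = False


def layout_problems(vswitch_team: list[Nic], smb_nics: list[Nic]) -> list[str]:
    """Check the 'team for the vSwitch, RDMA on non-teamed NICs' layout."""
    problems = []
    teamed = {nic.name for nic in vswitch_team}
    for nic in smb_nics:
        if nic.name in teamed:
            problems.append(f"{nic.name}: RDMA NIC is also a team member")
        if not nic.rdma_capable:
            problems.append(f"{nic.name}: not RDMA capable")
    # Assumption: with independent switches, each group should span both switches
    # so that losing (or upgrading) one switch never isolates the host.
    if len({nic.switch for nic in vswitch_team}) < 2:
        problems.append("vSwitch team does not span both switches")
    if len({nic.switch for nic in smb_nics}) < 2:
        problems.append("SMB/RDMA NICs do not span both switches")
    return problems


team = [Nic("NIC1", "A"), Nic("NIC2", "B")]
smb = [Nic("NIC3", "A", rdma_capable=True), Nic("NIC4", "B", rdma_capable=True)]

if __name__ == "__main__":
    print(layout_problems(team, smb))  # []
```

Keeping the RDMA NICs out of the team means SMB Multichannel, not NIC teaming, provides their redundancy, while the teamed pair only carries vSwitch/guest traffic.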

Pingback: Design Considerations For Converged Networking On A Budget With Switch Independent Teaming In Windows Server 2012 Hyper-V | Working Hard In IT